DM7903: Design Practice

Introduction

Across the 15-week DM7903: Design Practice module, I produced a Proof of Concept (POC) for an augmented reality (AR) mobile application. The application’s user base would be students at the University of Winchester’s Multimedia Centre who would like training to use equipment, such as digital cameras.

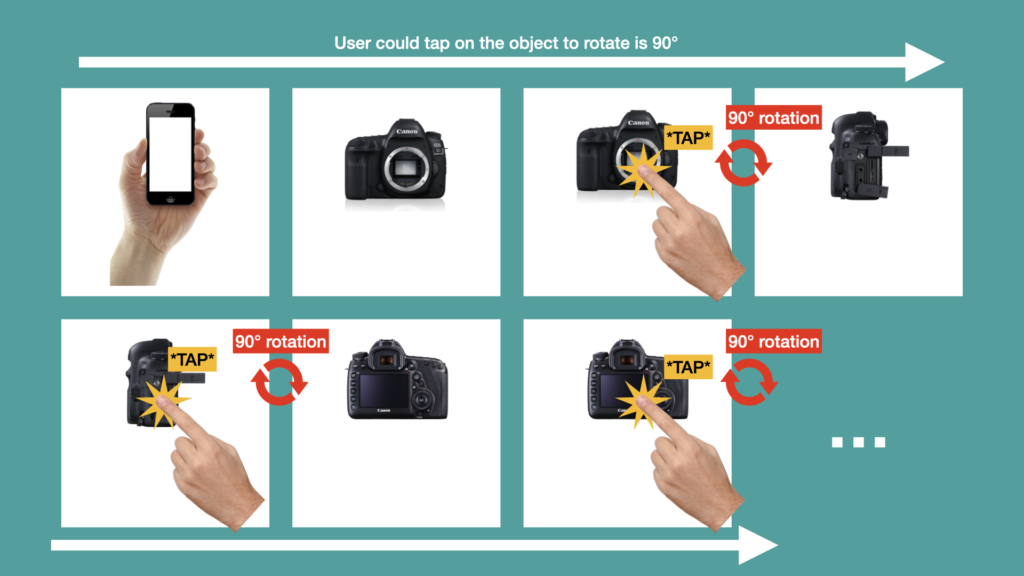

In its infancy, the application enables students to place virtual 3D models of cameras onto surfaces within their physical environment. They can then reposition and rotate the cameras to view their assembly process, interfaces, and functions.

I named the mobile application “Multimedia Centre AR” (MMC AR), as the generalised name would permit scope for future expansion beyond cameras, to include lighting and sound equipment as well as exploration of TV and radio studios.

As I present this project, I will provide links to relevant blog posts I have made throughout the project’s development. Each blog post provides further insight into each milestone, alongside my own reflections upon the challenges and personal development opportunities that I experienced.

Learning Goals

Taking a holistic approach to my learning, I’ve considered how this module’s requirements have integrated with my learning goals on the MA programme. I have listed each learning goal below, then briefly explained how my activities within the module have met them.

- Research User Centred Design (UCD) Regarding Accessibility, Education and Training

- Practise Research, Analysis and Project Management Skills Required for PhD Study

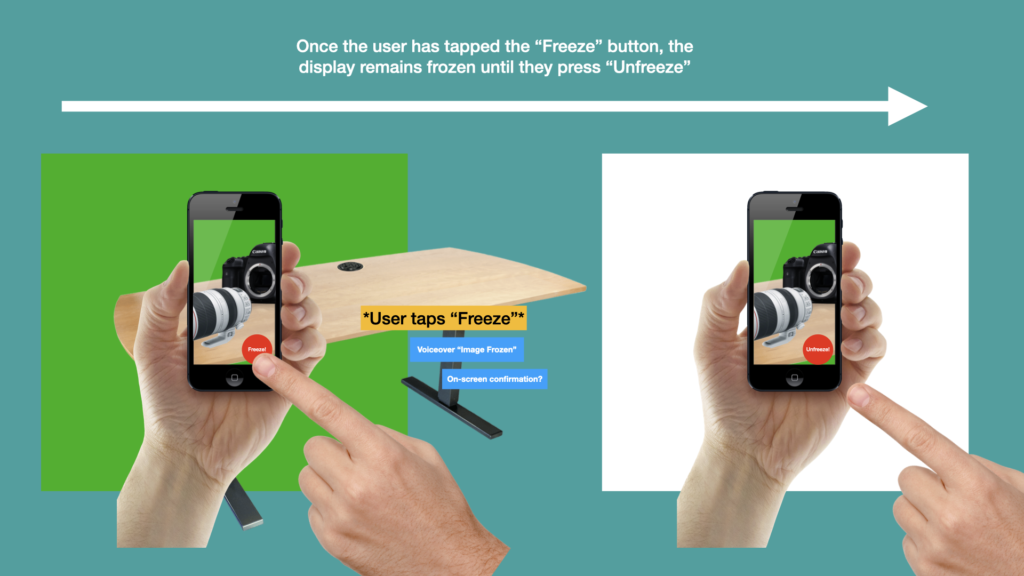

- I have researched how a variety of educational and consumer mobile applications make use of AR technologies; I considered their strengths and limitations regarding functionality and accessibility, and combined this learning with research from published academic papers on the state of accessibility in AR. Consequently, I integrated user interface features into the MMC AR application such as ‘Freeze Frames,’ reachable tab bars, and selection drawers into the application

- I challenged myself to respond to some real-world research that I had carried out in the DM7921: Design Research module. Design decisions and the formulation of a Proof of Concept were informed by empathy maps and the journey mapping process of the current Multimedia Centre training provision

- Practise Iterative Design Processes Including Prototyping and Working with Personas

- I carefully conducted a cyclical, iterative design process, beginning with sketches, wireframes, and user flow maps, which steadily evolved towards paper, medium-fidelity, and high-fidelity prototypes. Along the way, a usability testing process was integrated to offer a rudimentary method of gaining and integrating user feedback.

- Develop Proficiency in Specialist Software Packages

- This module gave me an opportunity to extend my Adobe XD skillset into previously unfamiliar areas such as object states, timed transitions, and integrated video clips.

- 3D object scanning and augmented reality software in Apple’s RealityKit and Object Capture API were all new and very challenging to me. Experimenting with this beta software rapidly expanded my knowledge of how the UX industry is embracing these tools to enhance the scope of its projects.

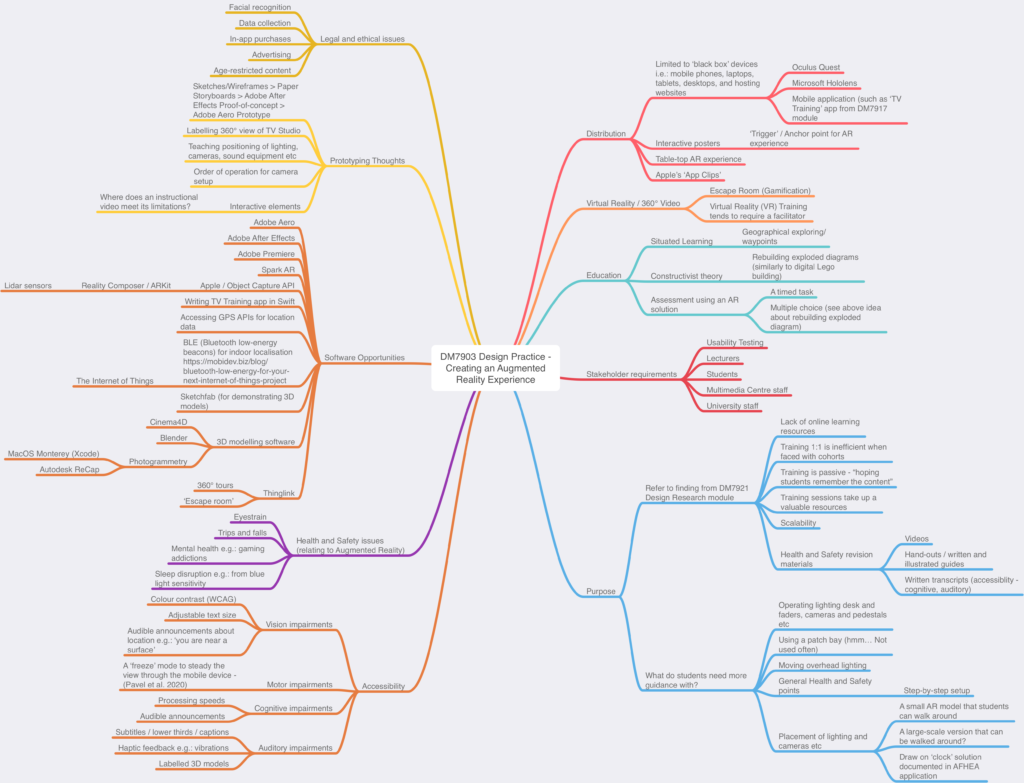

Ideas Generation and Timeline

To develop and record my initial ideas, I built a small mind map, which details how I addressed my personal interests within the module while building upon some of the prototyping skills I’d attained in the DM7917 module. From this mind map, I realised my intention to practise prototyping an AR experience within a mobile application prototype.

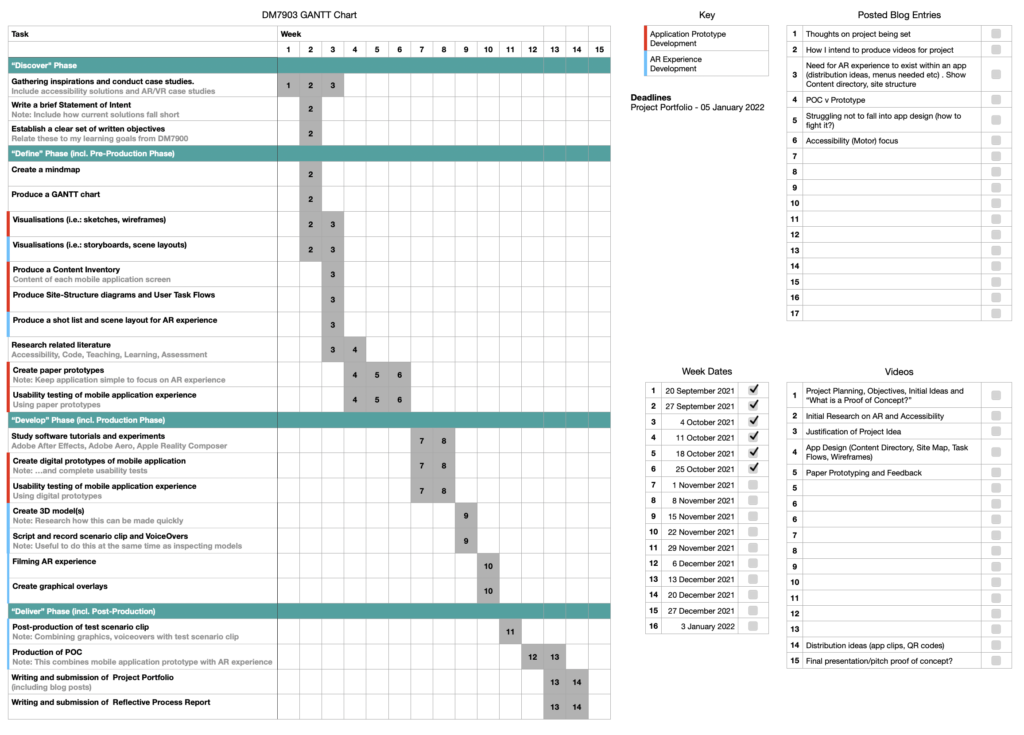

Regarding my time management, I produced a Gantt chart to identify tasks and milestones that would need to be met over the following 12 weeks. I was aware that my time planning would need to be meticulous for this module, as I was intending to prototype both an AR experience and a mobile app interdependently. Balancing my time between the two would be critical, as I intended to marry these two avenues together in the closing stages of the module to produce a final ‘Proof of Concept’.

Initial Research and Stakeholder Considerations

The scope of my initial research was considerable, which I found overwhelming at times. There were many unknowns, including the existence of competing AR experiences, accessibility considerations, and client needs such as compliance with the UN’s Sustainable Development Goals, to name a few (United Nations and The Partnering Initiative, 2020).

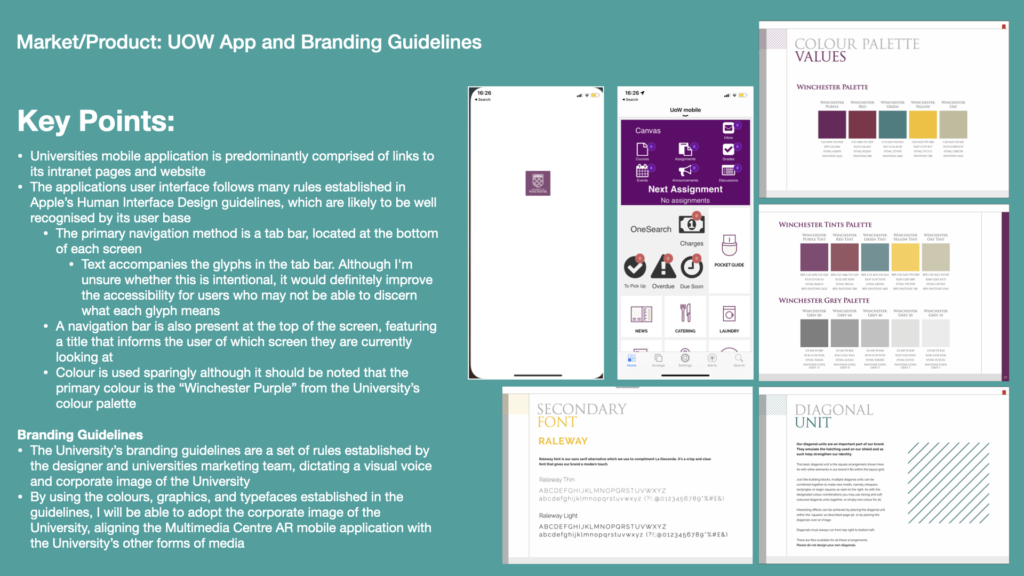

I began by researching the corporate image of my client, the University of Winchester. From the branding guidelines I could quickly build a crib sheet of relevant colours, graphics, and typefaces.

Deeper research revealed that the University intends to become a ‘beacon for social justice and sustainability,’ in part by transforming its operations to adhere to the United Nations’ Sustainable Development Goals (SDGs). As part of its guiding principles, the University has declared its intention to become carbon neutral by 2025, and has taken steps such as eliminating unnecessary single-use plastics and ensuring it has no investments in fossil fuels (University of Winchester, n.d.).

I saw the University’s business goals as an important aspect for consideration in the design of a mobile training solution. Where possible, features such as AR-based training could be leveraged to help the University achieve these goals. In this scenario, the mobile application could enable students and staff to complete training on camera equipment without needing to travel to campus. Theoretically, this could help the University achieve its carbon neutral target.

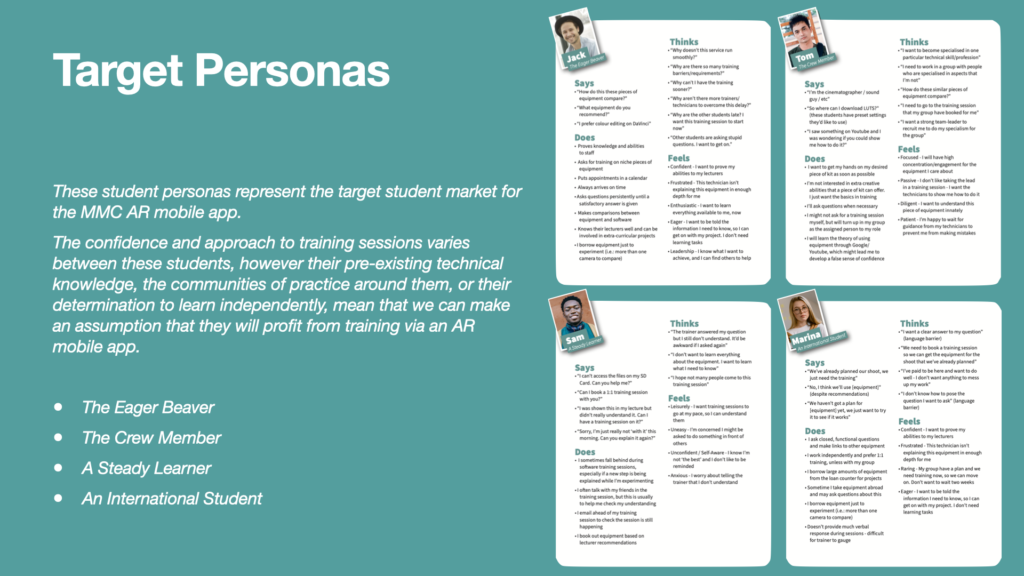

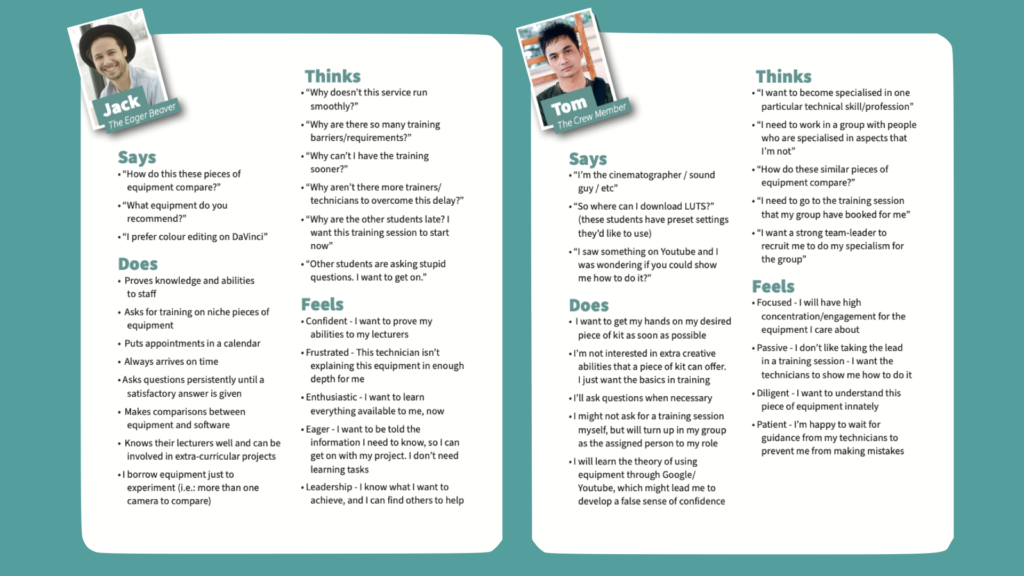

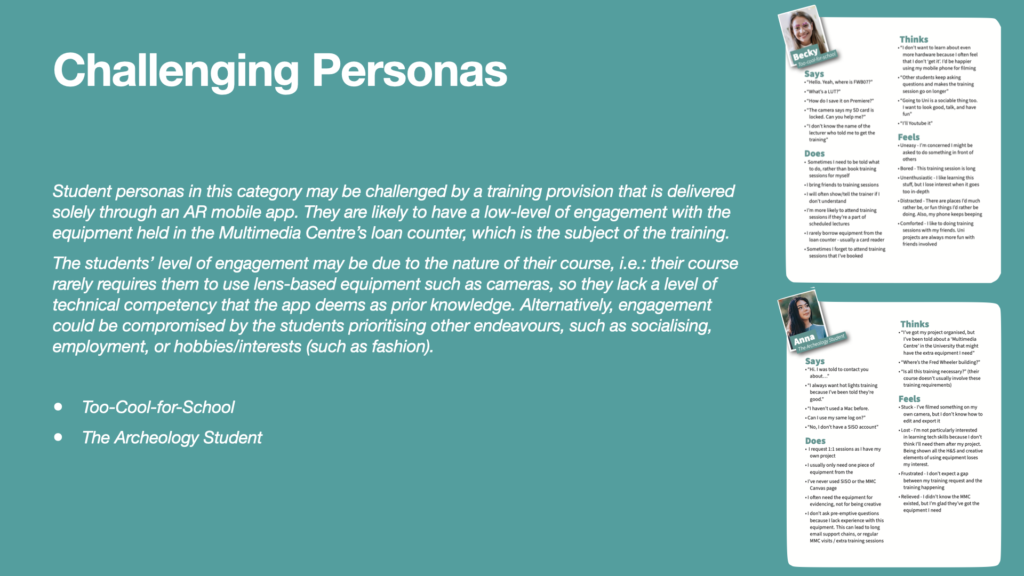

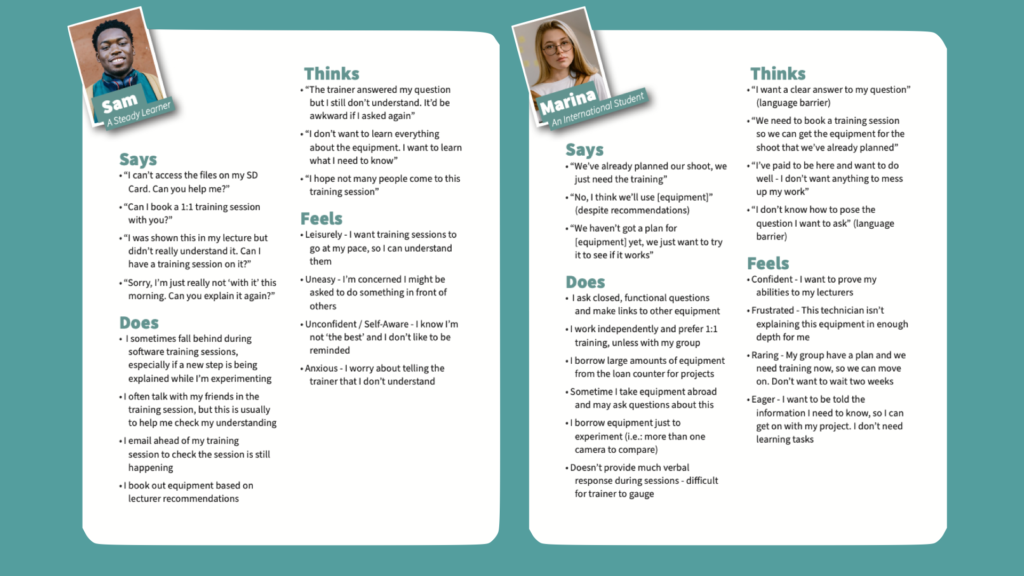

Further to these client considerations, I also needed to consider the mobile app’s end users – the University’s students. In an earlier module I had carried out design research into these users, culminating in the creation of personas through empathy maps (Helcoop, 2021). This proved to be very useful in justifying the need for an AR-based mobile training provision at the University, and so would directly inform my Proof of Concept.

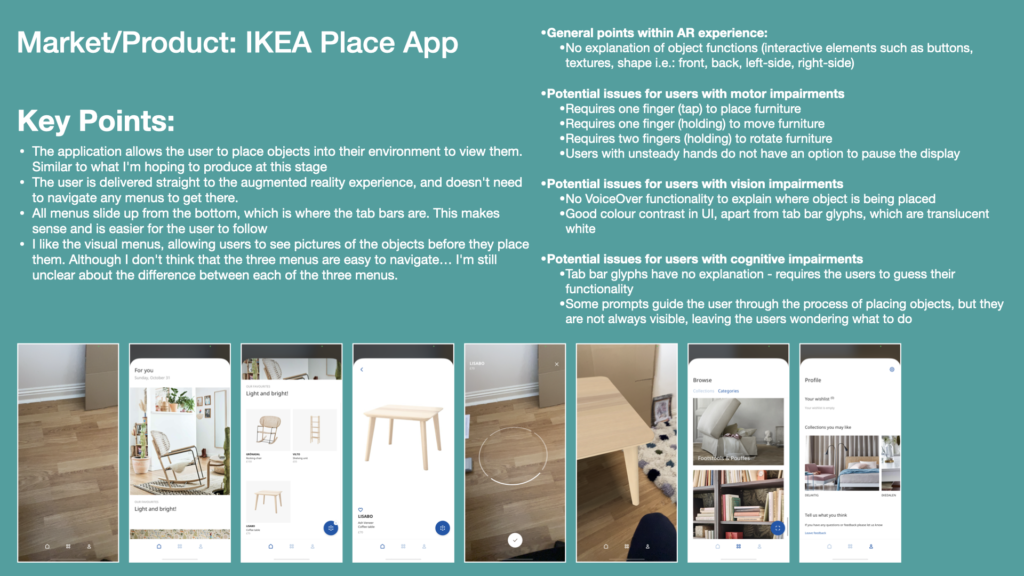

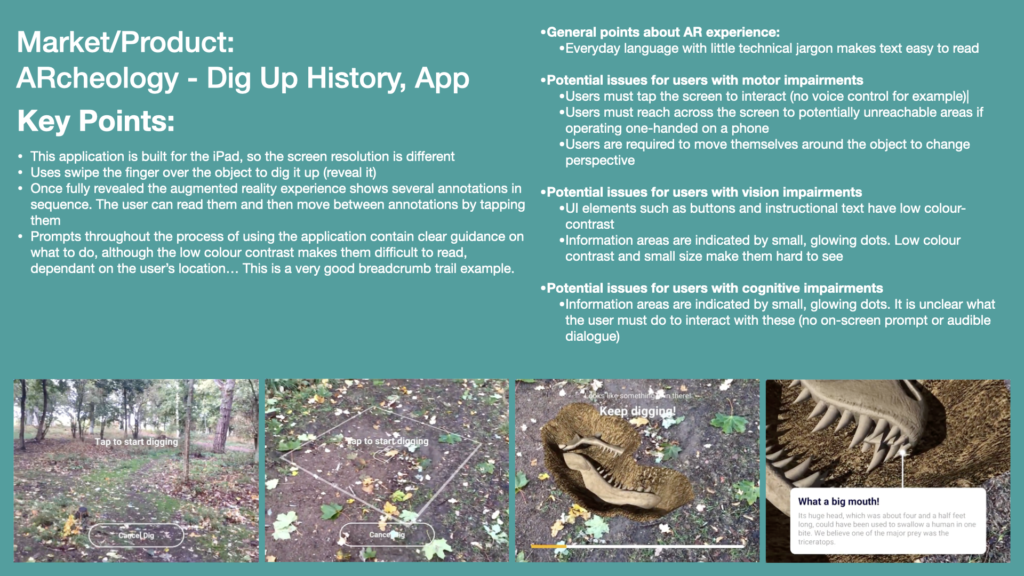

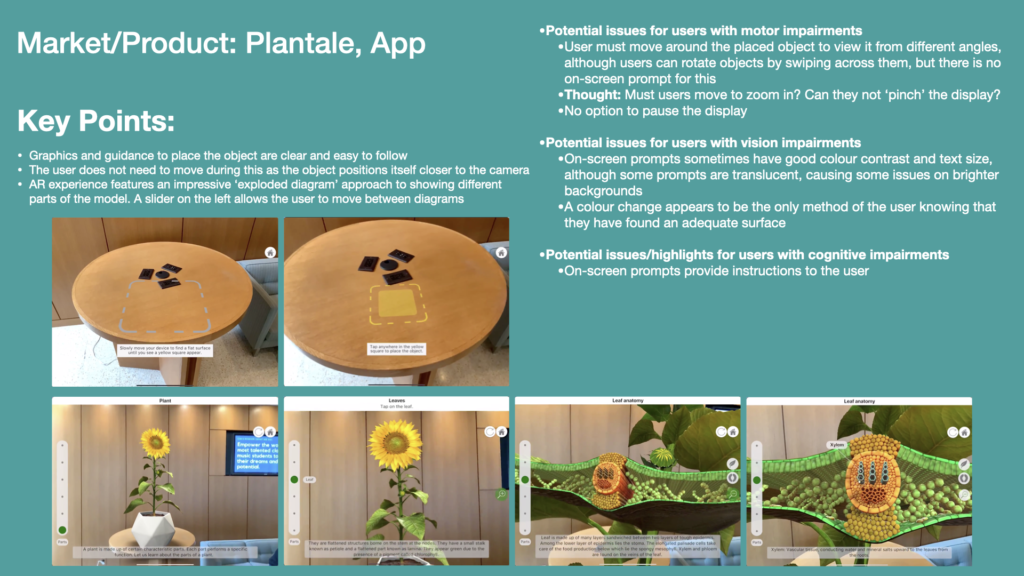

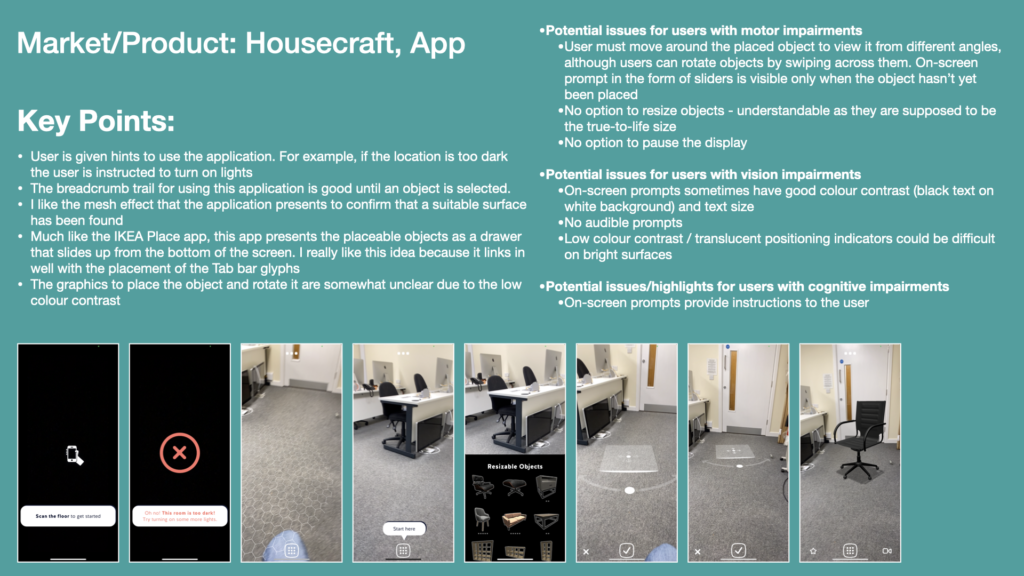

I also systematically researched similar, pre-existing mobile applications, and compiled my findings into individual presentation slides. This method of summarising my research helped me to gain feedback from peers during informal conversations and group critiques. I could present my research on slides, which would then prompt them to criticise and question gaps in the research or suggest how I could respond to it. One example of this occurred in Week 7 when a peer identified that I hadn’t researched the ‘Piano 3D’ mobile application. She stated that it was a good example of a very complicated and potentially inaccessible user interface (UI). Consequently, I researched this application and criticised its accessibility limitations, noting how I could avoid them.

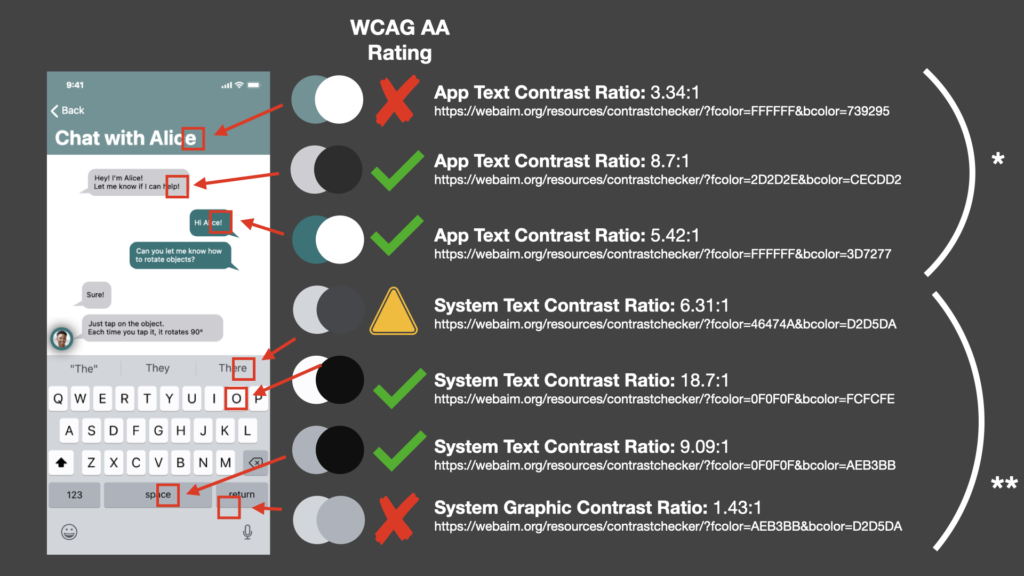

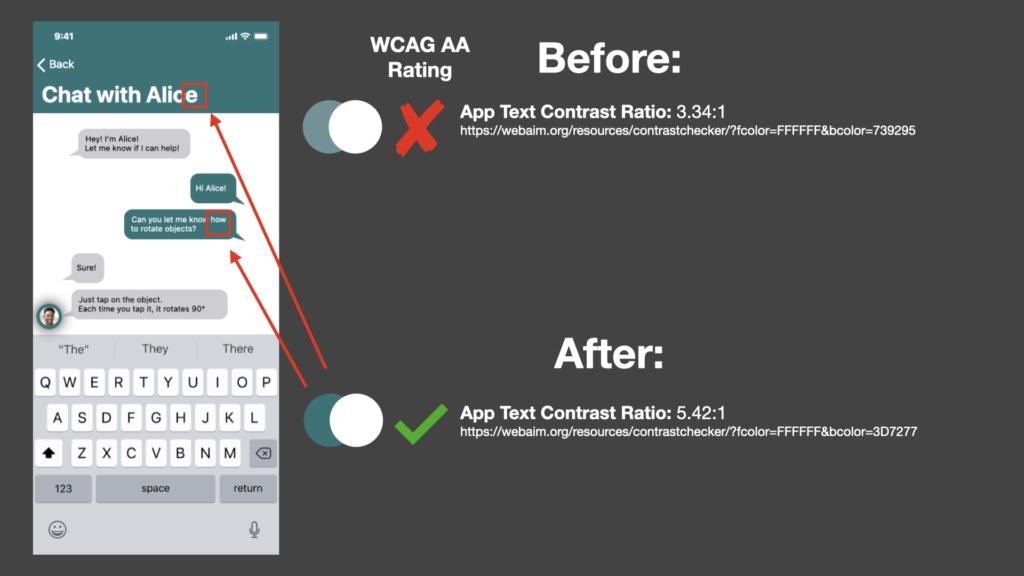

Accessibility-based research formed the largest area of research for this project. A personal intention of mine was to ensure that the final prototype had accessibility considerations built in from the very early stages of the design process. This is detailed in my early research of academic papers in relation to accessibility considerations and AR apps, as well as my reflections in Week 6 (see Blog – Accessibility Research).

Below, you can follow the Issuu link to view my finalised Initial Research presentation.

Ideation and Early Visualisations

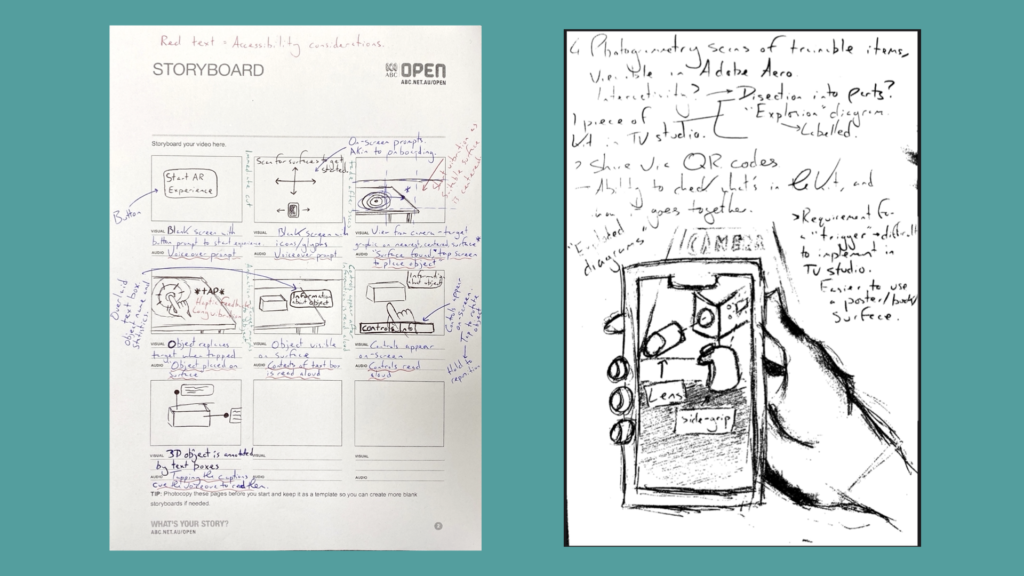

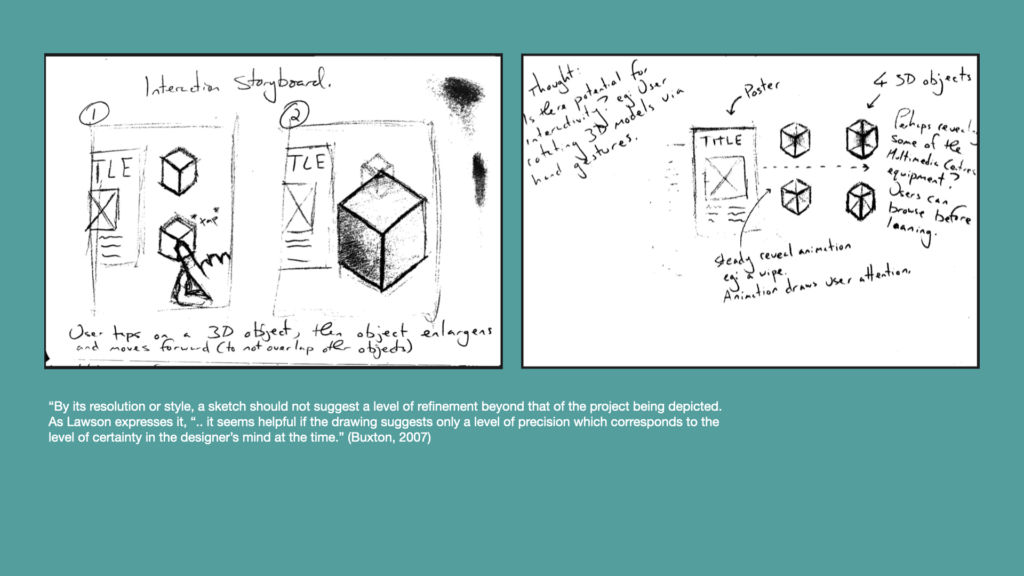

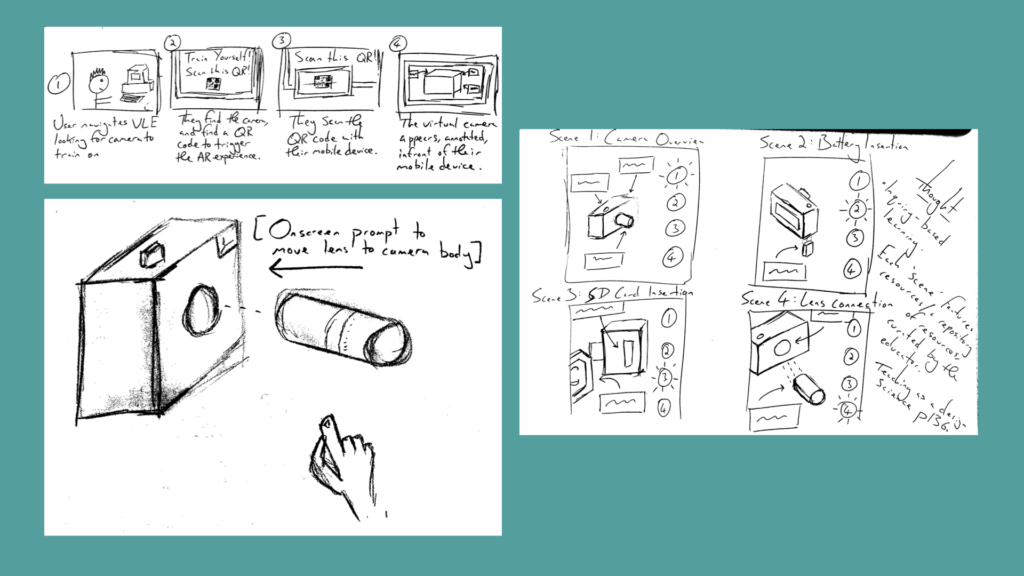

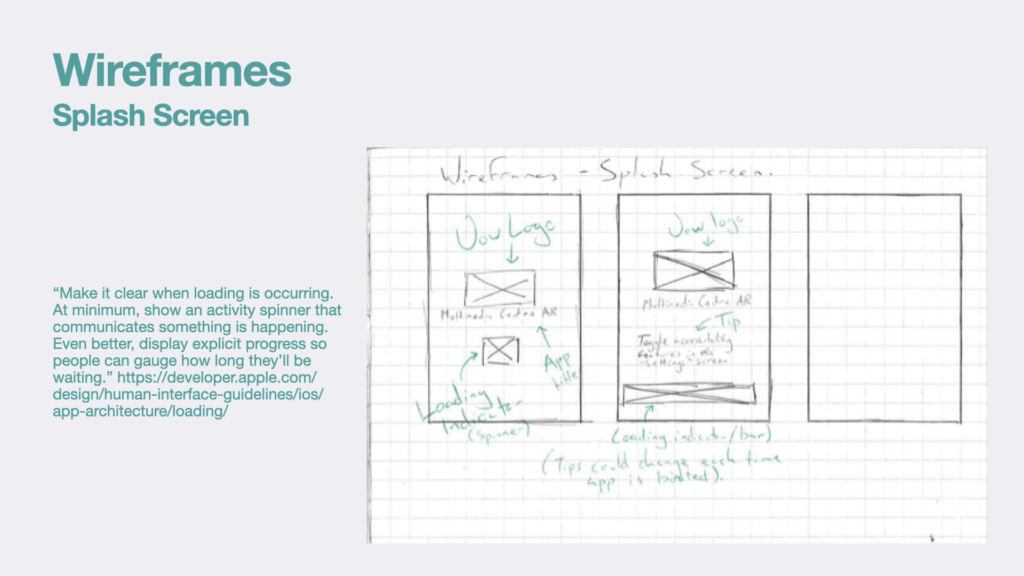

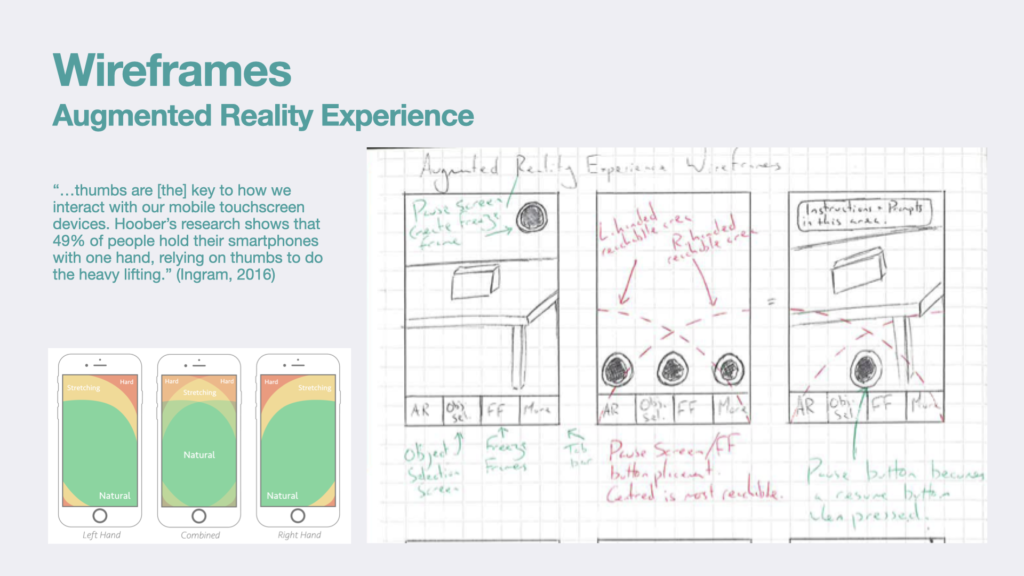

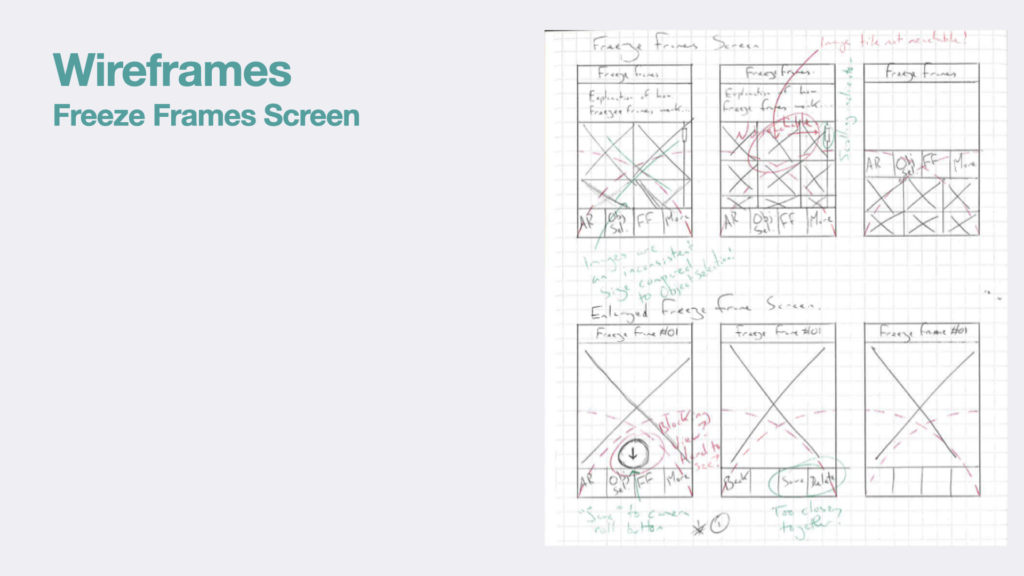

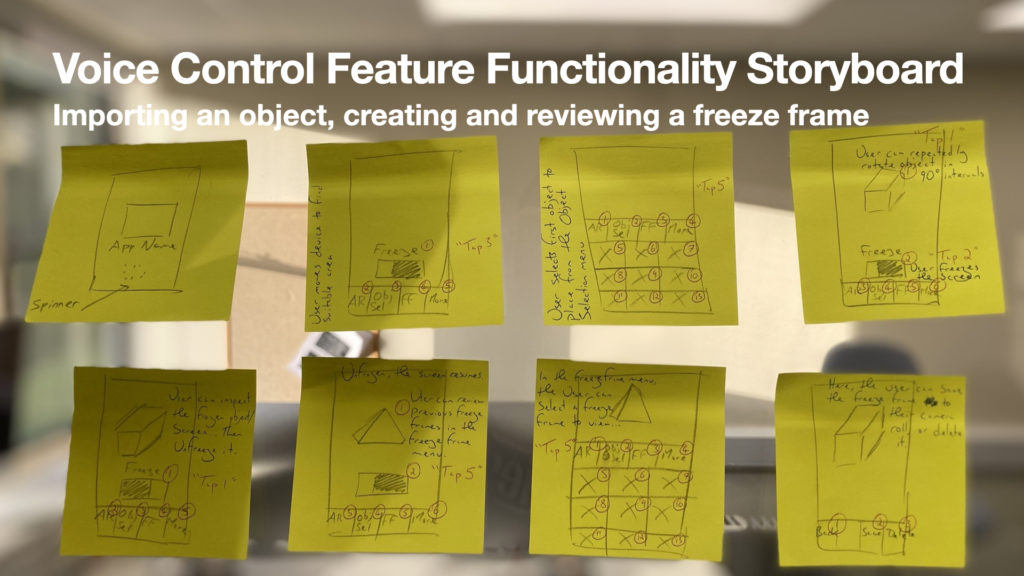

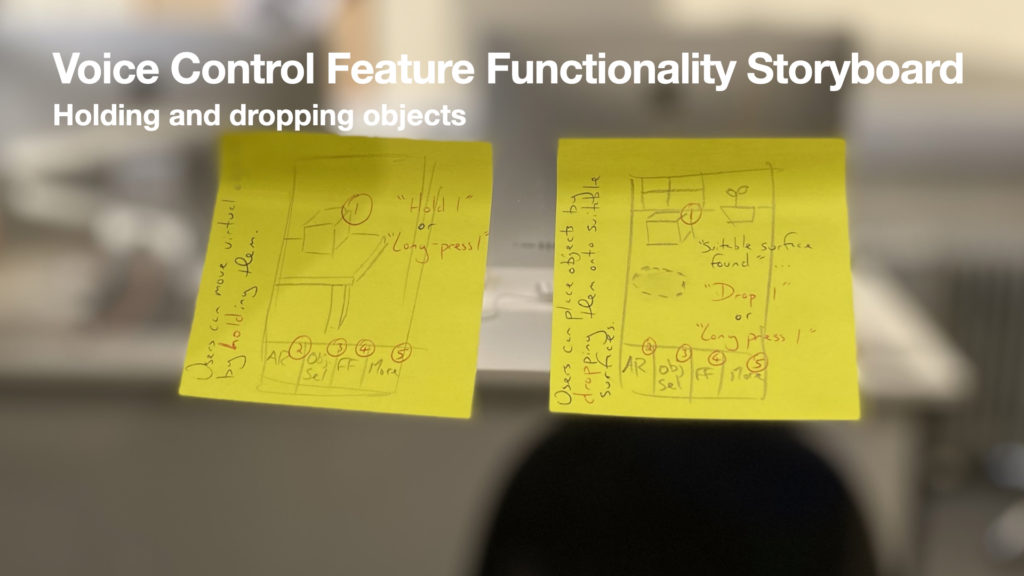

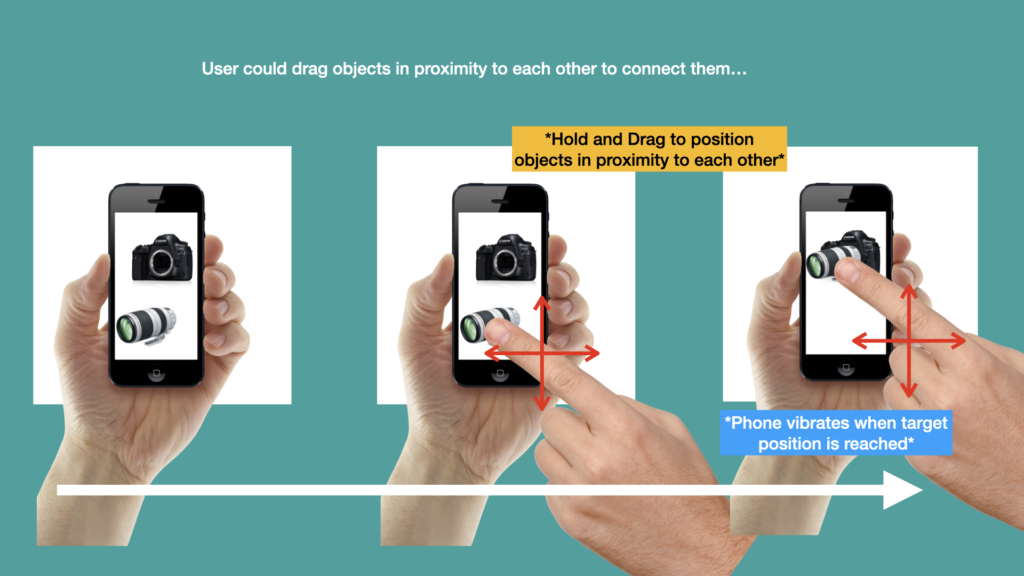

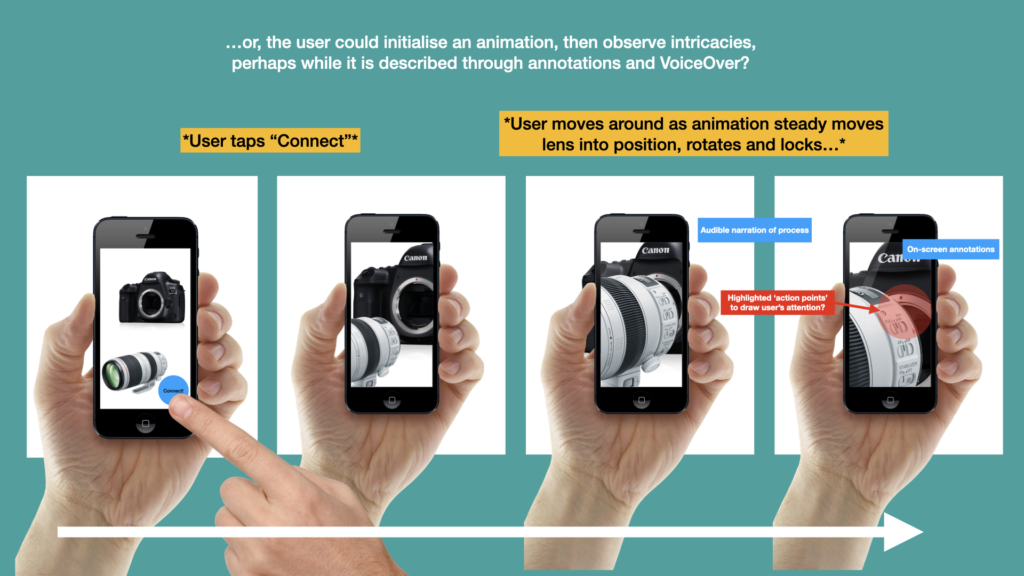

By producing a range of progressively detailed sketches and wireframes, I could openly explore how my design ideas could combine with the outcomes of my research. These sketches and wireframes also provided a broad illustration of how I imagined a user would launch and interact with the mobile app and AR experience.

Initially, I explored the idea of creating a ‘target’ such as a poster, which could launch the augmented reality experience when the mobile device’s camera was aimed at it, much akin to the ‘Paper AR’ concept explored by Andaluz et al. (2019). However, in the interest of ubiquity I decided that I would like the user to be able to utilise the AR experience without needing any other physical objects to launch it.

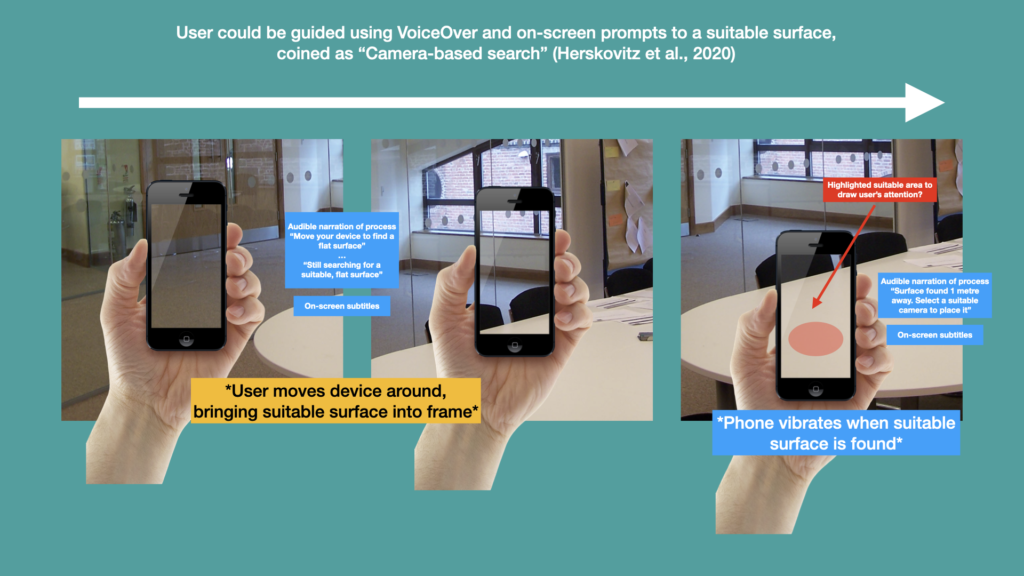

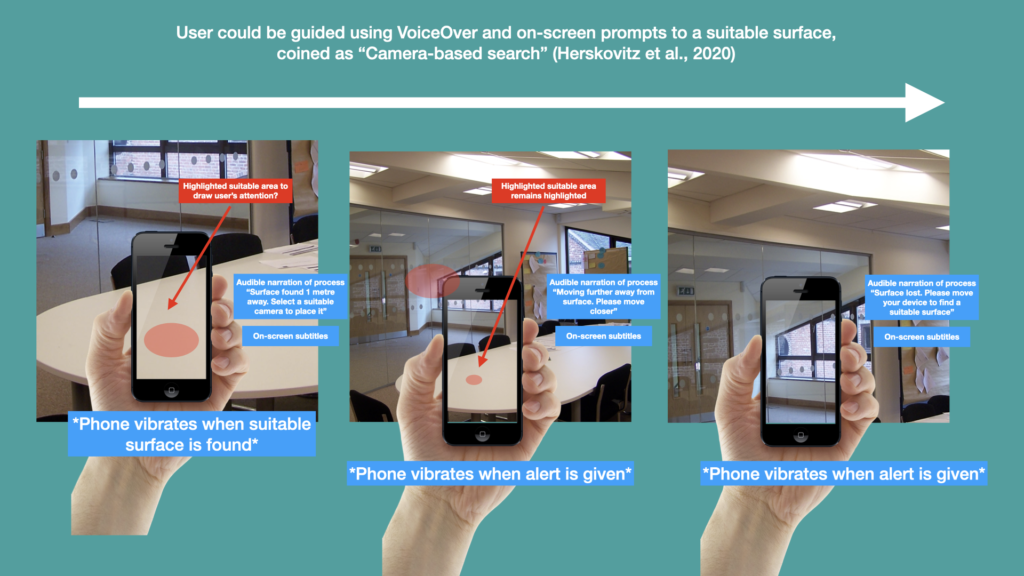

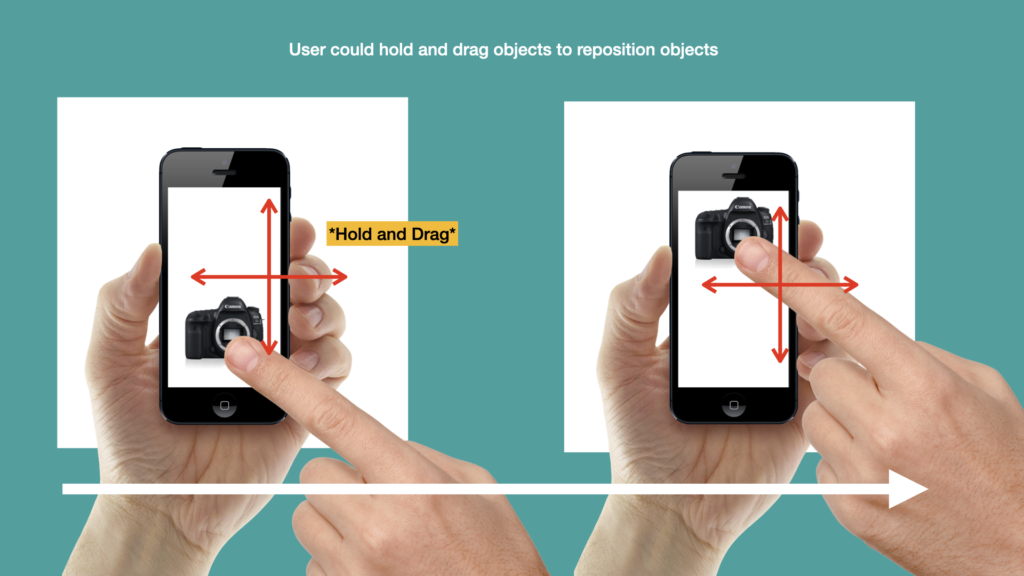

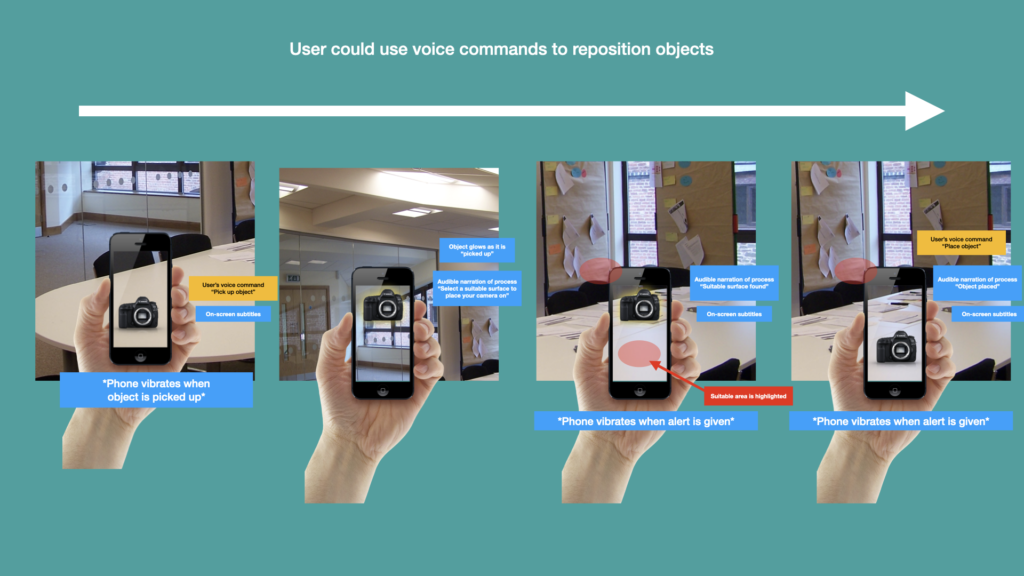

As I explored the possibilities for launching and using the AR experience, I repeatedly found that each solution posed accessibility challenges. My choice to not anchor the launch of an AR experience to a ‘target’ meant that I would need to consider how users could navigate to suitable surfaces to place virtual objects upon. Users living with vision impairments might need to be guided through utilities such as VoiceOver, while users with motor impairments may struggle to perform the touch gestures needed to place a virtual object and move it around. Thankfully my research into academic papers on accessibility and AR presented many theoretical solutions for making the AR experience accessible, although visualising how these would work in practice was a further challenge!

In the interest of developing my design knowledge and skillset relating to accessibility, I decided to focus primarily upon designing and implementing accessibility features for users with motor impairments. My research had shown me that accessibility is a very wide-ranging issue within design, which would be too much to cover in one 12-week project. I moved forward by mapping common motor impairment symptoms to the planned accessibility functionalities included in my early visualisations. By categorising these symptoms I could cater for a wide scope of motor impairments using only three application functions.

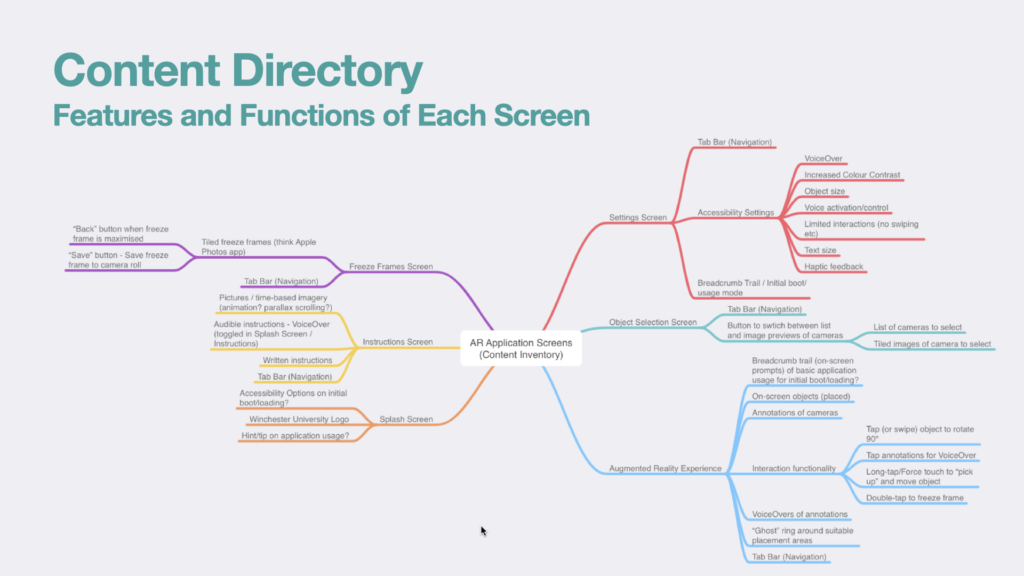

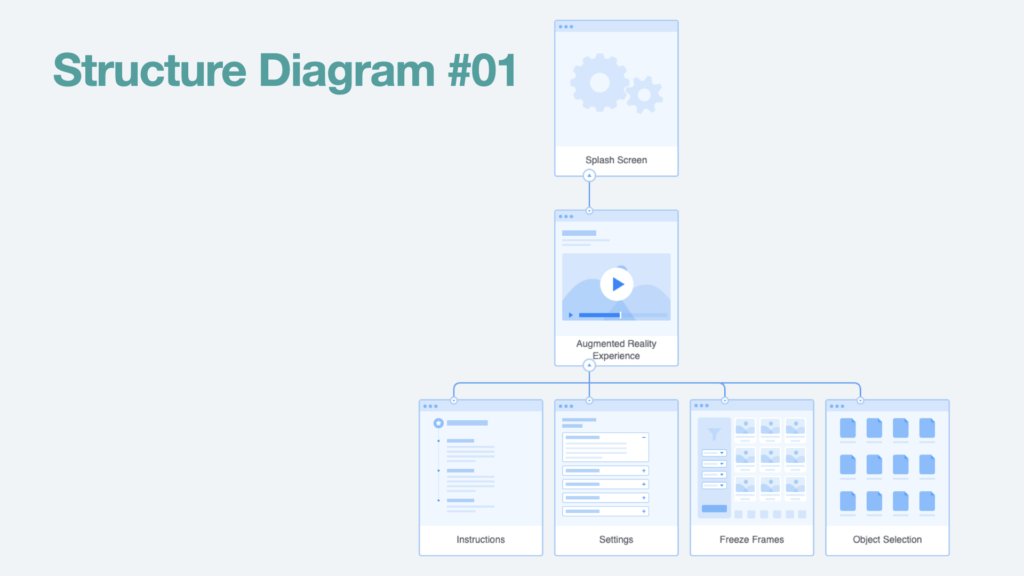

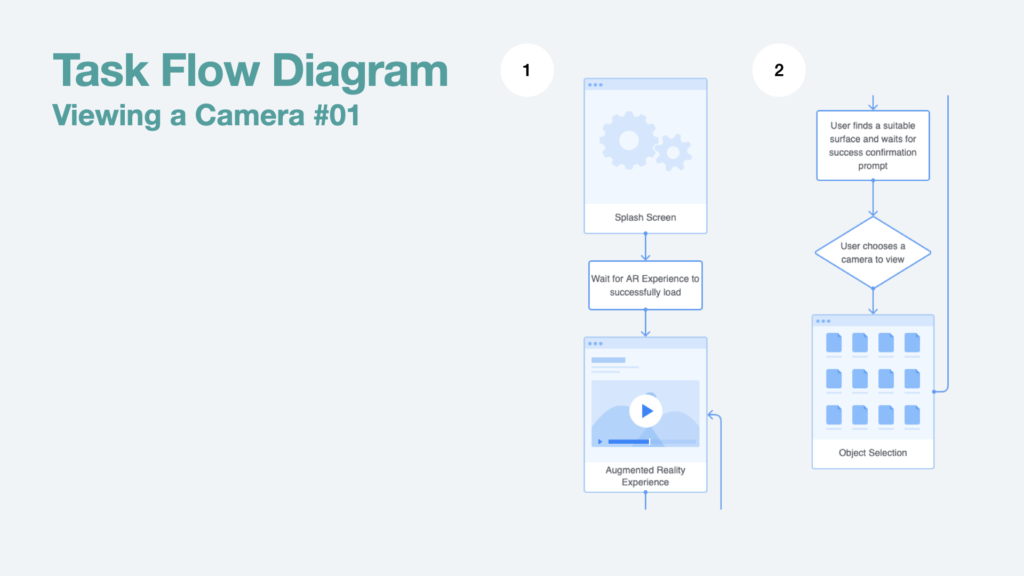

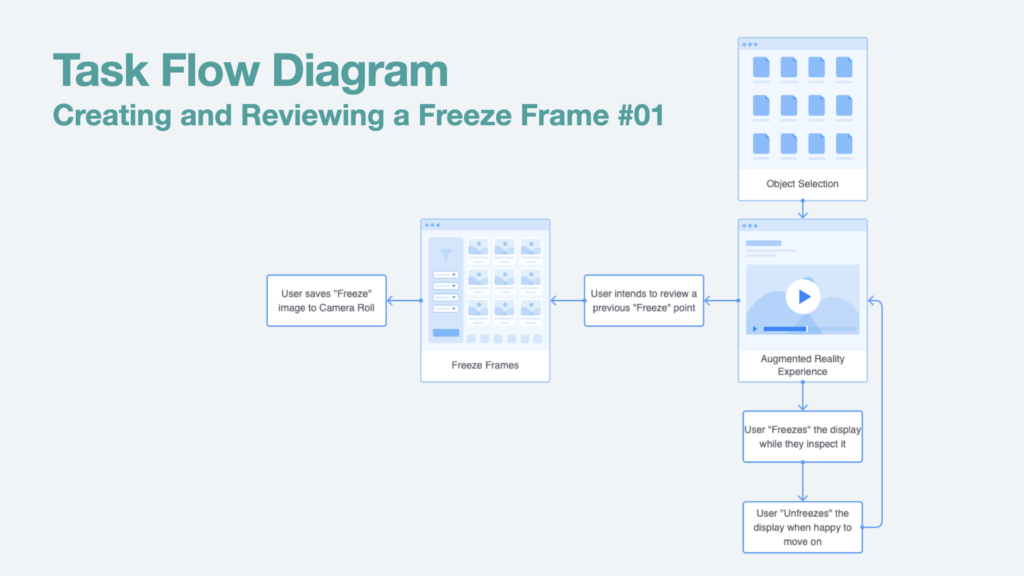

Further development from sketches and wireframes took the form of a Content Directory, Structure Diagram, and User Flow maps. Each of these documents helped me formulate how the mobile application’s features would be linked together across multiple screens, as well as how efficiently the user could access them.

AR Experience Production

To prototype the in-app AR experience, I knew I would need to create 3D virtual objects from a real camera body and camera lens. Initially, I had no idea how to achieve this in a realistic timeframe. I had limited experience of working in 3D software and knew I would not be able to build an object from scratch for this module. However, I was aware that Apple had unveiled a new API in macOS Monterey called ‘Object Capture,’ which put photogrammetry functionality into app designers’ hands (Apple, n.d.).

I signed up to the Apple Developer programme and downloaded Xcode, then managed to implement Apple’s ‘HelloPhotogrammetry’ example code and produce 3D scans. After a few attempts, I arrived at fully rendered virtual versions of the scanned objects. Any ‘gaps’ in the scans could be filled by taking extra photos of those areas. These scans would be perfect for producing a proof of concept.
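For readers curious about what this step involves in code, the following is a minimal sketch of an Object Capture request using RealityKit’s `PhotogrammetrySession` on macOS Monterey or later. It is not Apple’s ‘HelloPhotogrammetry’ sample, nor the exact code I ran: the folder and file paths are hypothetical placeholders, and the `.reduced` detail level is chosen purely for illustration.

```swift
import Foundation
import RealityKit

// Hypothetical paths: a folder of overlapping photos of the object,
// and the .usdz model file that the session should write.
let inputFolder = URL(fileURLWithPath: "/path/to/photos", isDirectory: true)
let outputFile = URL(fileURLWithPath: "/path/to/camera-body.usdz")

do {
    // Create a session that reads every image in the input folder.
    let session = try PhotogrammetrySession(input: inputFolder)

    // Listen for progress and results on a separate task.
    Task {
        do {
            for try await output in session.outputs {
                switch output {
                case .requestProgress(_, let fractionComplete):
                    print("Progress: \(Int(fractionComplete * 100))%")
                case .requestComplete(_, let result):
                    if case .modelFile(let url) = result {
                        print("Model written to \(url.path)")
                    }
                case .requestError(_, let error):
                    print("Request failed: \(error)")
                case .processingComplete:
                    exit(0) // All requests have finished.
                default:
                    break // Other messages are ignored in this sketch.
                }
            }
        } catch {
            print("Session error: \(error)")
            exit(1)
        }
    }

    // Ask for a single model file; .reduced trades quality for a smaller file.
    try session.process(requests: [.modelFile(url: outputFile, detail: .reduced)])

    // Keep the command-line process alive while the session works.
    RunLoop.main.run()
} catch {
    print("Could not start photogrammetry: \(error)")
    exit(1)
}
```

The `detail` parameter also accepts `.preview`, `.medium`, `.full`, and `.raw`, so the same photos can be reprocessed at a different quality level without being reshot.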

At this stage of the project I was aware that Adobe XD would not permit me to incorporate an AR experience into a prototype. The workaround for this would be to create videos that would simulate the AR experience for the sake of presenting to potential investors and stakeholders.

The video below documents the process of experimentation from initial 3D scans and photogrammetry, to placing the 3D virtual objects onto a table using Adobe After Effects, Cinema 4D Lite, and Apple’s Reality Composer. I found this process to be very challenging and time consuming, especially as I am not ‘au fait’ with working in 3D software. I have found that working in 3D is very different from the two-dimensional design fields that I’m accustomed to, such as photography and graphic design. Working with four program windows covering the X, Y and Z axes was mind-boggling, but despite this I was able to achieve a suitable outcome for a prototype.

Producing a short 12-second video in Adobe After Effects and Cinema 4D Lite required well over two hours in render time alone, which was the largest drawback of the method. This issue was further amplified by the need to create many iterations of the same video as I adjusted lighting, animations, and filming angles.

As the project progressed, I carried out a similar experiment using Apple’s Reality Composer software, which is built into Xcode. In this software I could construct AR experiences using the .usdz objects that I had already created via photogrammetry. My first attempt at this wasn’t very responsive due to the large file sizes of my photogrammetry scans. Once I had reduced the quality (and file sizes) of the scans, the experience was far more responsive.

Medium Fidelity Prototyping and Usability Testing

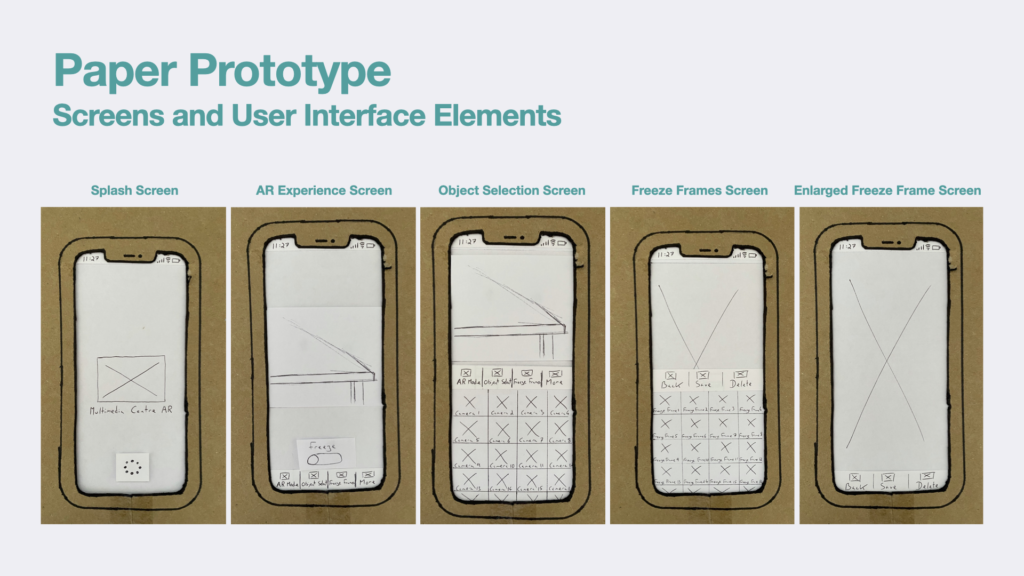

I began prototyping the mobile application by creating a low-fidelity paper prototype. This was a quick process, which made it easy to iterate and integrate feedback from usability tests (The Interaction Design Foundation, n.d.). Further to this, I feel that the unpolished finish of the paper prototype meant that my usability tester could feel comfortable criticising the prototype without offending me.

Each app screen was made from cut-outs of paper, with UI elements drawn using a Biro pen or a pencil. I didn’t spend much time thinking about the aesthetics of the application, as at this stage my priority was the wayfinding mechanics of the application. In the upcoming usability test, I would be asking myself the following questions:

- Can the user access basic functions of the application?

- Does the user show any negative behaviours when using the prototype, such as confusion, frustration, or being impatient?

- Does the user feel that user interface elements are placed correctly?

- Does the user make any mistakes when navigating?

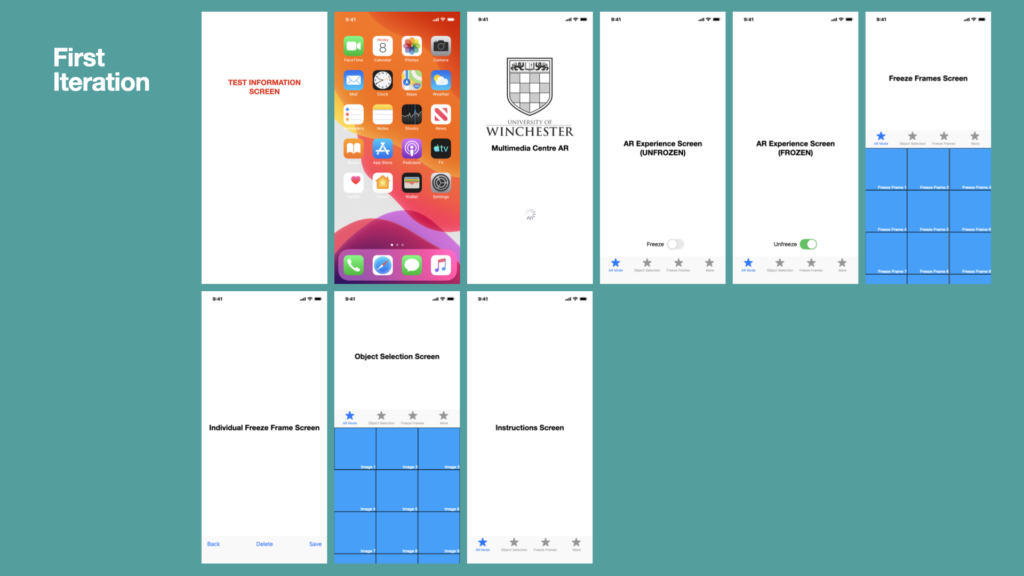

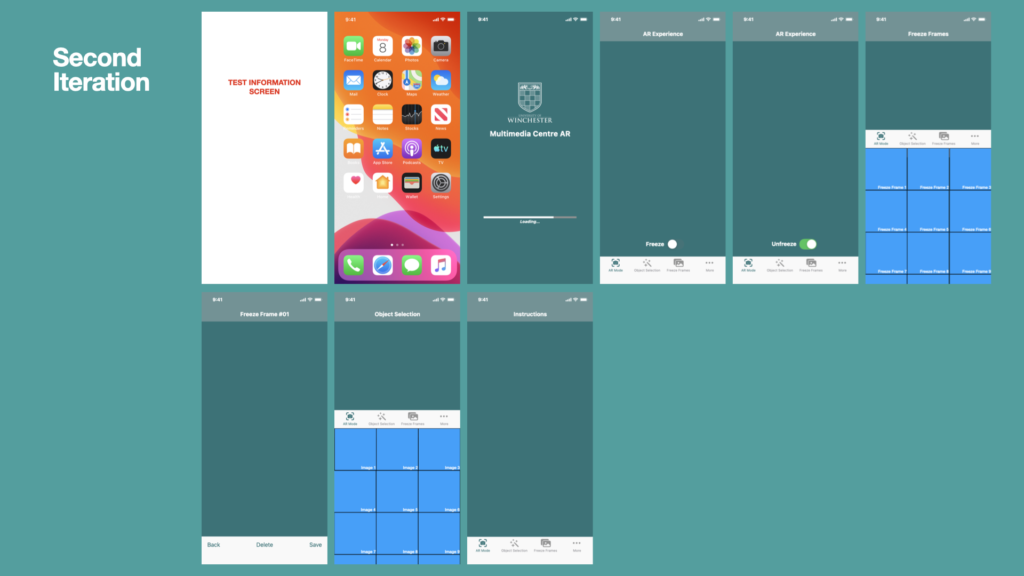

Once I felt confident with the wayfinding mechanics of the paper prototype, I was able to move on to developing a medium-fidelity prototype using Apple’s Keynote software. This software was perfect for my requirements, as it allowed me to make use of Apple’s Human Interface Guidelines, complete with user interface elements that I could borrow (Apple, 2019). It would also be able to function on my phone for usability testing.

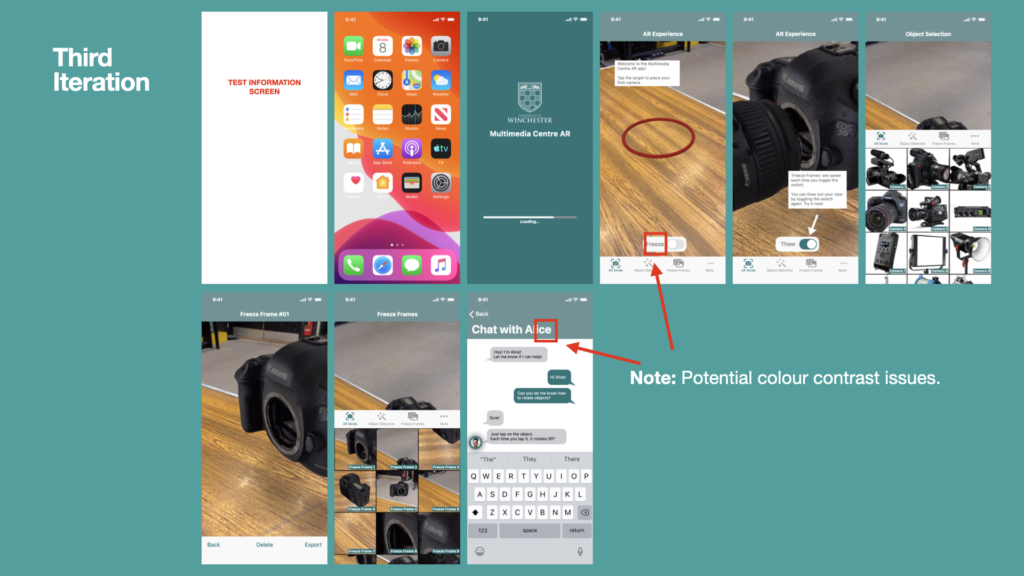

The medium-fidelity prototype took much longer to build than the paper prototype, especially as I considered how the design could incorporate the University’s branding: colour palette, graphics, and typeface. I also developed the prototype so that it adhered to common practices of app design, such as the inclusion of a loading screen, tab bars, and a navigation bar. I employed a cyclical, iterative method of producing the prototype, allowing myself time for reflection between each version.

In the final version of the medium fidelity prototype it was imperative to include hyperlinks between each screen and the tab bar elements. This allowed me to carry out a usability test with my own iPhone and the Keynote software built into it.

The second and third usability tests focused upon the tester’s experience of basic app functionality and accessibility functions. Each test revealed where the tester became confused or behaved in an unintended manner; these issues needed to be rectified to prevent them from reaching a final release candidate of the mobile application. You can watch each usability test in the videos below, and review each report/reflection in their respective blog posts.

High Fidelity Prototyping

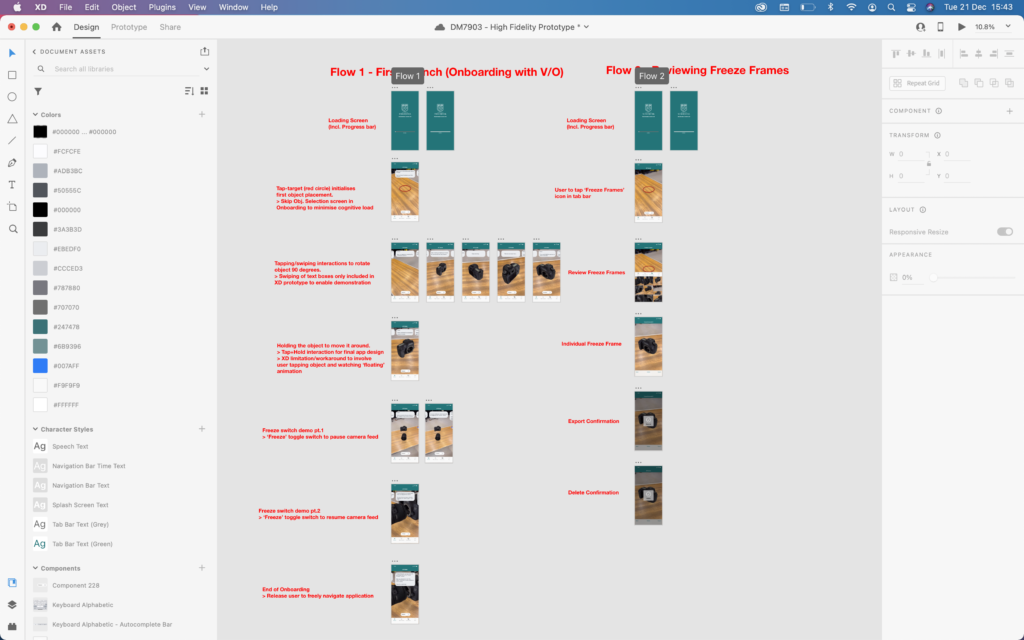

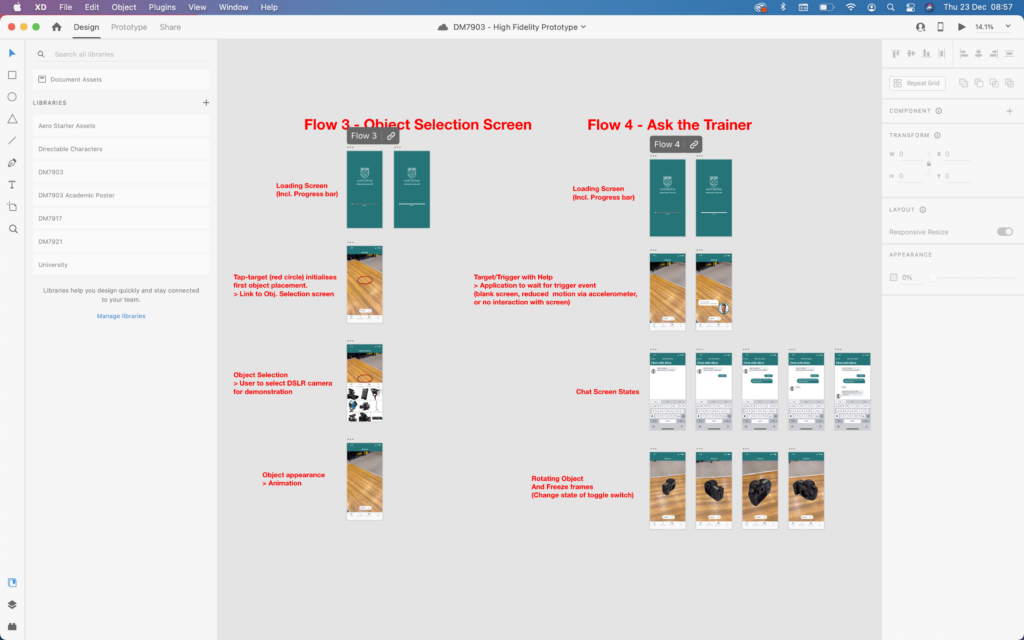

At the high-fidelity prototyping stage it became necessary for me to consider how the prototype would be experienced by investors and stakeholders. Knowing that the application included videos to simulate an AR experience, I made sure that each app screen and video was viewed in the correct order by building the prototype as four ‘Flows’ that the client could preview:

Flow 1 – Onboarding with VoiceOver, including Freeze Frame creation

Flow 2 – Reviewing Freeze Frames

Flow 3 – Object Selection Screen

Flow 4 – ‘Ask the Trainer’ Feature

This was a very different method of working for me, and I had to fight an inner desire to create one large prototype that could be navigated non-linearly.

I created a screen recording of myself accessing each of these flows, and would later compile this into my Proof of Concept presentation. I would also exhibit each of these flows for stakeholders to interact with on a dummy iPhone, after viewing my presentation.

Final Proof of Concept and Deliverables

To present a Proof of Concept for the Multimedia Centre Augmented Reality mobile application (‘MMC AR’ app, for short), I have produced a range of deliverables. These include:

- A 10-minute presentation to stakeholders

- A demonstration video of the four user-flows

- Links to the four user-flows, which would be exhibited on a dummy iPhone for hands-on interaction by stakeholders

Flow 1 – Onboarding with VoiceOver, including Freeze Frame creation:

https://xd.adobe.com/view/66c075fd-fc6d-4779-969a-efedc36c2600-3393/?fullscreen

Flow 2 – Review Freeze Frames:

https://xd.adobe.com/view/b381bac3-f6e5-4508-a812-20aff89dcdd9-e6d2/?fullscreen

Flow 3 – Object Selection Screen:

https://xd.adobe.com/view/bb19391e-bcd6-4b53-a2f9-d2370fc33ef4-ee4a/?fullscreen

Flow 4 – ‘Ask the Trainer’ demonstration:

https://xd.adobe.com/view/75f2879b-ae64-42d1-9637-bf59f2509110-20a1/?fullscreen

To finalise this project I have produced a recording of the 10-minute presentation that would be given to stakeholders, such as the Multimedia Centre staff and the Director of IT. The presentation demonstrates the functionality of the application alongside accessibility features, funding opportunities, and development challenges. I have also taken the opportunity to explain how research in a prior module, DM7921: Design Research, has influenced the design process.

References

Andaluz, V., Mora-Aguilar, J., Sarzosa, D., Santana, J., Acosta, A. and Naranjo, C. (2019). Augmented Reality in Laboratory’s Instruments, Teaching and Interaction Learning. Augmented Reality, Virtual Reality, and Computer Graphics: 6th International Conference, [online] 11614. Available at: https://ebookcentral.proquest.com/lib/winchester/detail.action?pq-origsite=primo&docID=5923384#goto_toc [Accessed 30 Sep. 2021].

Apple (2019). Human Interface Guidelines. [online] Apple.com. Available at: https://developer.apple.com/design/human-interface-guidelines/ios/overview/themes/ [Accessed 6 Jul. 2021].

Apple (2020). Loading. [online] Apple.com. Available at: https://developer.apple.com/design/human-interface-guidelines/ios/app-architecture/loading/ [Accessed Oct. 2021].

Apple (n.d.). Introducing Object Capture. [online] Apple Developer. Available at: https://developer.apple.com/augmented-reality/object-capture/ [Accessed Oct. 2021].

Buxton, B. (2007). Sketching User Experiences: Getting the Design Right and the Right Design. San Francisco, CA: Elsevier.

Craig, A.B. (2013). Understanding Augmented Reality: Concepts and Applications. San Diego: Elsevier Science & Technology Books.

Helcoop, C. (2021). How Virtual Reality Could Transform the Training Provision for the TV Studio at the University of Winchester’s Multimedia Centre. The University of Winchester.

Herskovitz, J., Wu, J., White, S., Pavel, A., Reyes, G., Guo, A. and Bigham, J. (2020). Making Mobile Augmented Reality Applications Accessible. ASSETS ’20: International ACM SIGACCESS Conference on Computers and Accessibility. [online] Available at: https://dl.acm.org/doi/10.1145/3373625.3417006 [Accessed 26 Sep. 2021].

The Interaction Design Foundation. (n.d.). What Is Paper Prototyping? [online] Available at: https://www.interaction-design.org/literature/topics/paper-prototyping [Accessed 31 Aug. 2021].

United Nations and The Partnering Initiative (2020). Partnership Platforms for the Sustainable Development Goals: Learning from Practice. [online] United Nations Sustainable Development, p.31. Available at: https://sustainabledevelopment.un.org/content/documents/2699Platforms_for_Partnership_Report_v0.92.pdf [Accessed 22 Nov. 2021].

University of Winchester (n.d.). Our strategy. [online] University of Winchester. Available at: https://www.winchester.ac.uk/about-us/our-future/our-strategy/ [Accessed 1 Nov. 2021].

WebAIM (2012). WebAIM: Motor Disabilities – Types of Motor Disabilities. [online] Webaim.org. Available at: https://webaim.org/articles/motor/motordisabilities [Accessed 28 Oct. 2021].

Wynn, P. (2019). How to Improve Fine Motor Skills Affected by Neurologic Disorders. [online] Brainandlife.org. Available at: https://www.brainandlife.org/articles/a-loss-of-fine-motor-skills-is-a-common-symptom/ [Accessed 26 Oct. 2021].