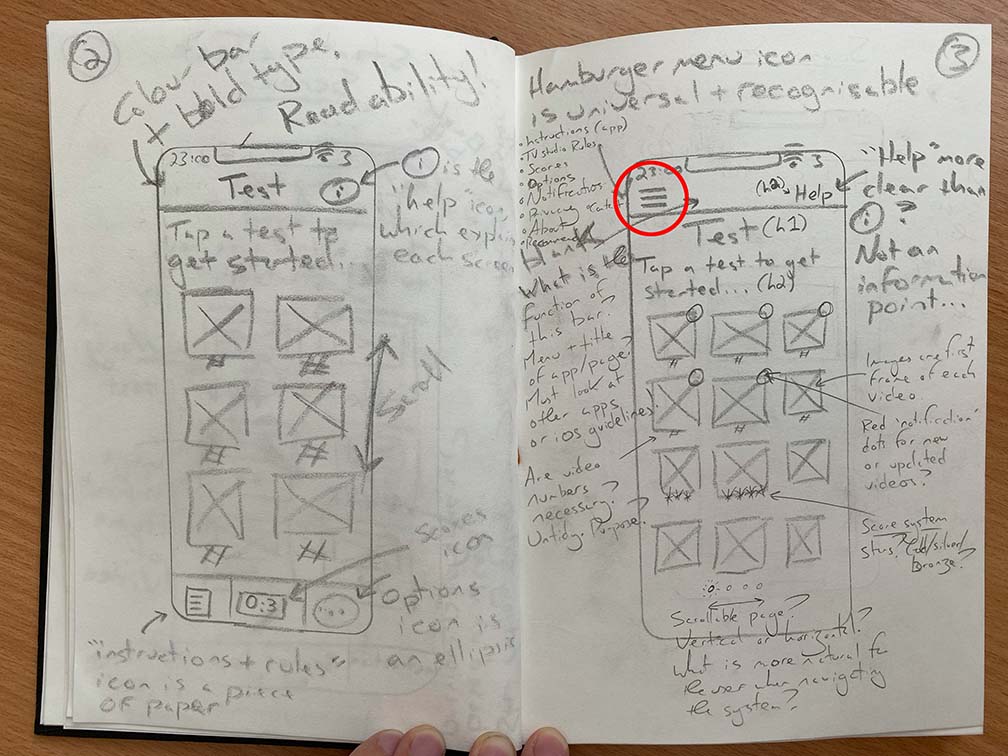

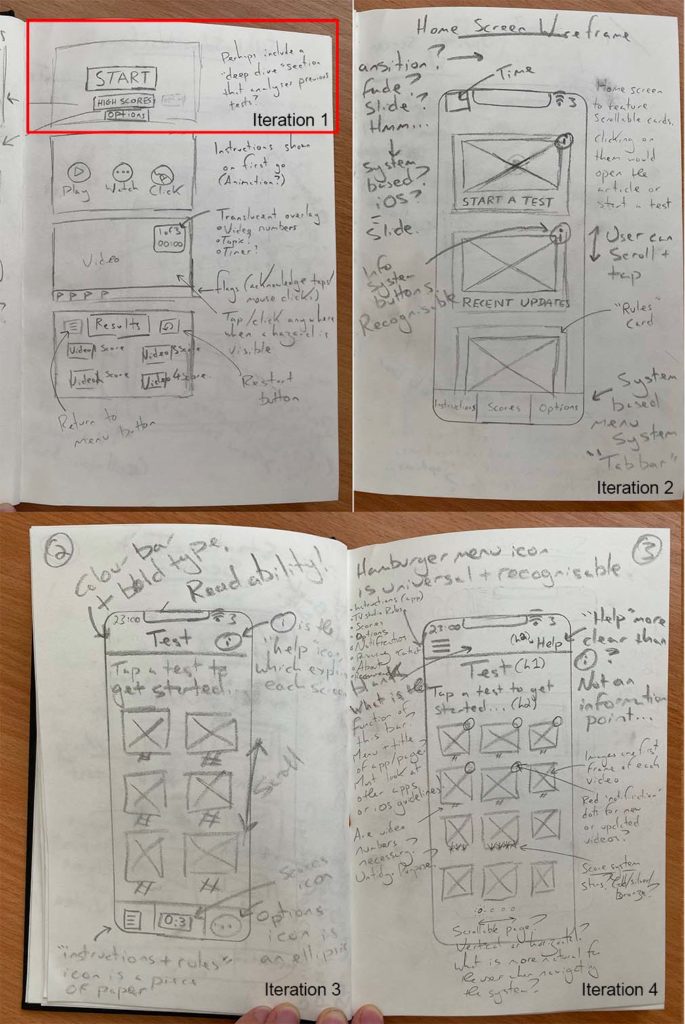

Before producing my first paper prototype, I reviewed some of my initial wireframes, primarily to address the wayfinding mechanism. I was dissatisfied with having both a back button and a tab bar; it made more sense to use a hamburger menu, as this would unify the functionality of both. The app has a flat information architecture (it doesn’t have many levels), so every screen can be listed in a hamburger menu without the user needing to scroll. Pressing a back button achieves the same outcome as opening the hamburger menu and selecting the screen the user wants to see. This adds one step/tap, but it removes the need for two separate user interface elements.

The other aspect of my wireframes that I wanted to revisit was the scrolling behaviour. In my last wireframes I decided to use vertical scrolling throughout and stay consistent with it so as not to confuse the user. However, I now understand that horizontal scrolling carries a user expectation that it will reveal ‘stages’ rather than one long screen or page. Provided that I use a page control (a row of dots showing how many pages there are and which one is visible), users should understand that they need to scroll horizontally rather than vertically. The following quote from Apple’s Human Interface Guidelines supports this:

“Consider showing a page control element when a scroll view is in paging mode. A page control shows how many pages, screens, or other chunks of content are available and indicates which one is currently visible. If you show a page control with a scroll view, disable the scrolling indicator on the same axis to avoid confusion.”

Source: https://developer.apple.com/design/human-interface-guidelines/ios/views/scroll-views/

Horizontal scrolling could be used on the “Start a Test” screen to divide tests into sections based on difficulty level; this is illustrated in Iteration 4. The scrolling behaviour itself would then act as a cue that psychologically divides the tests into distinct groups.

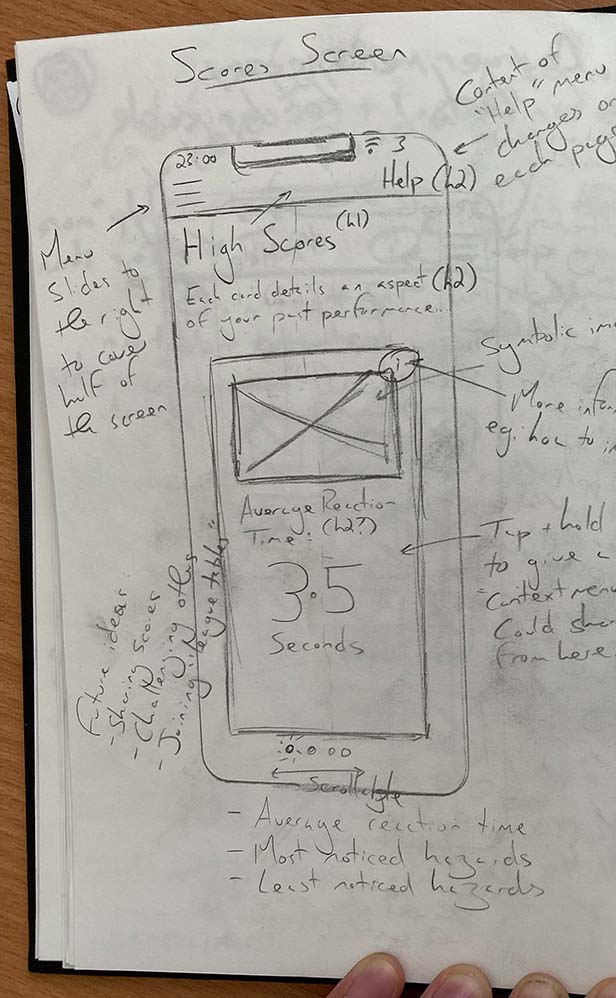

Horizontal scrolling could also be used on the Scores/Results screen to present different metrics, such as average reaction time, most-noticed hazards and least-noticed hazards.

Final thought: could the Instructions screen be presented as a series of steps using horizontal scrolling? A small number of steps, each accompanied by an image, would follow a pattern known as “Onboarding”. Onboarding presents snippets of information alongside minimalist imagery to create a fun, glanceable and informative experience that helps users understand a process.

Further information on Onboarding in iOS is available here: https://developer.apple.com/design/human-interface-guidelines/ios/app-architecture/onboarding/