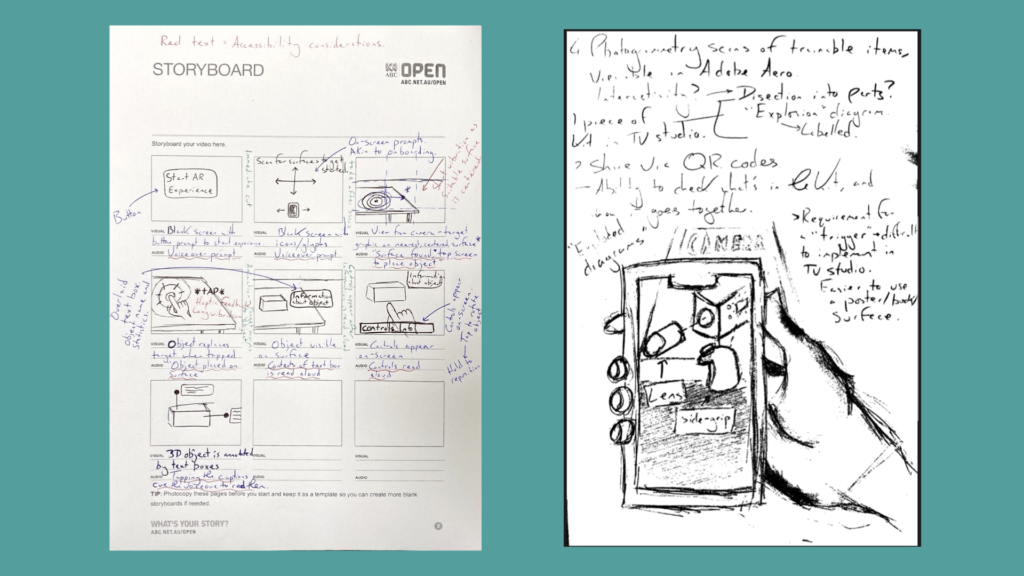

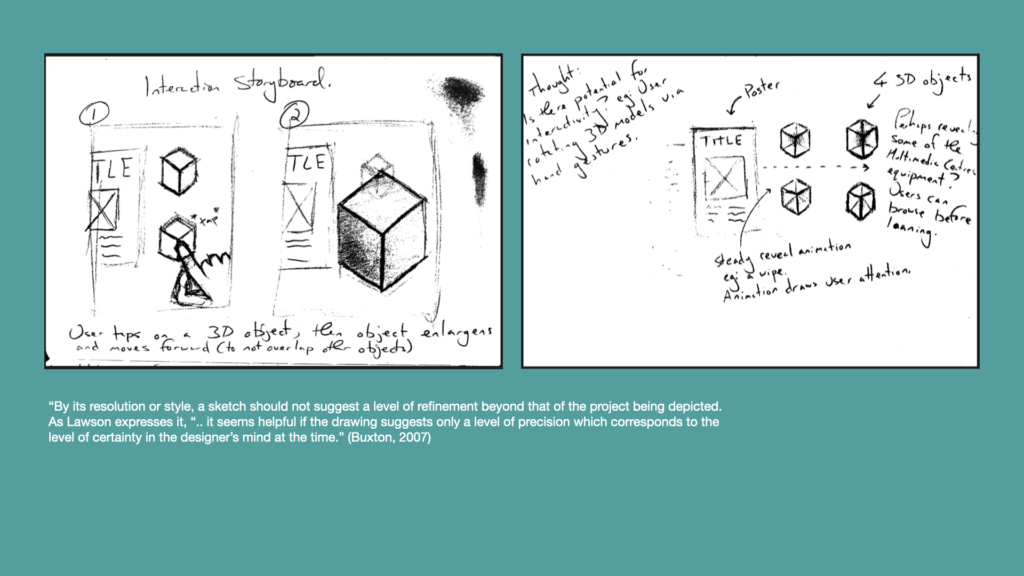

This week I’ve also started the visual design process by producing a range of sketches and storyboards. Aside from allowing me to think broadly and explore possible design options, these have provided a broad illustration of how I imagine a user would interact with the augmented reality experience. I’ve also been able to capture some ideas about the functionality I expect to implement and the user behaviour I expect to see.

By no means do I expect to implement all of the features and functionality shown here; these are broad ideas that are not yet refined.

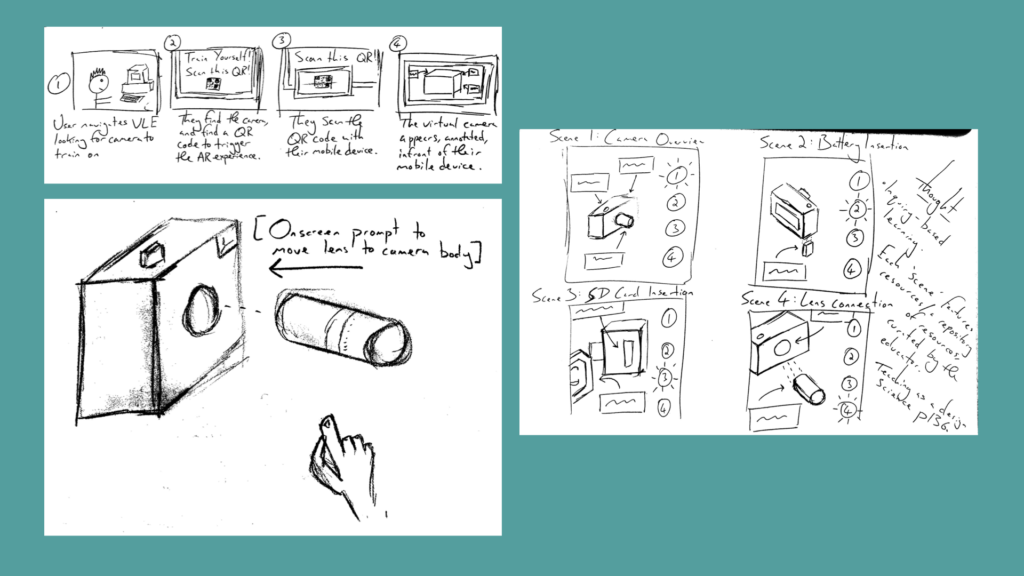

On the next few slides I’ve used found imagery to create montages that explain my ideas on how potential app functionality may work. I find this to be a quick method of conveying more refined ideas in an accurate manner, without the potential miscommunication or other questions arising from poorly drawn sketches!

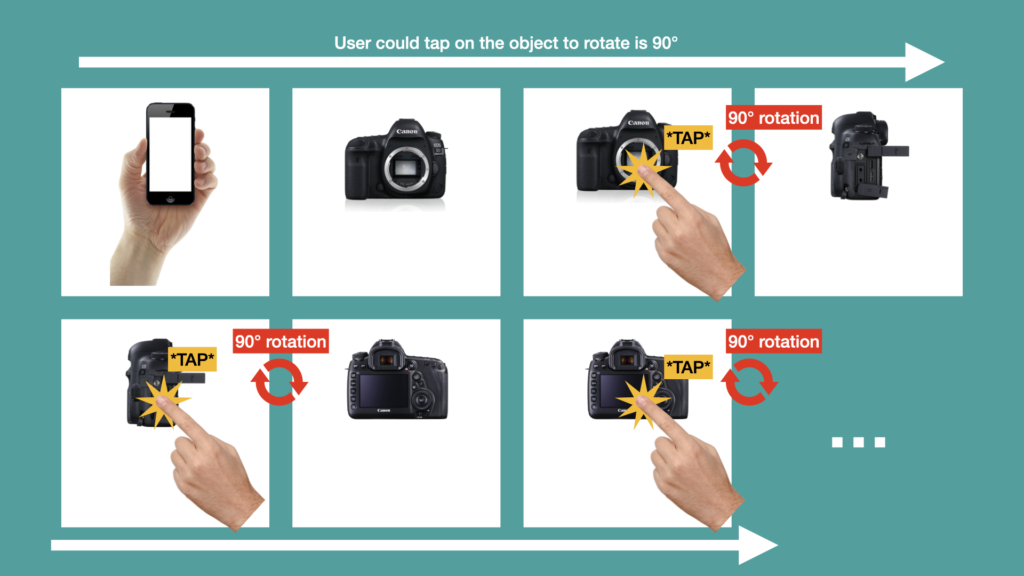

In the screen below I’ve depicted a storyboard of using a tap gesture to rotate the camera 90° with each tap. This could reduce the need for swiping across the interface, which could be difficult for some users with motor impairments.

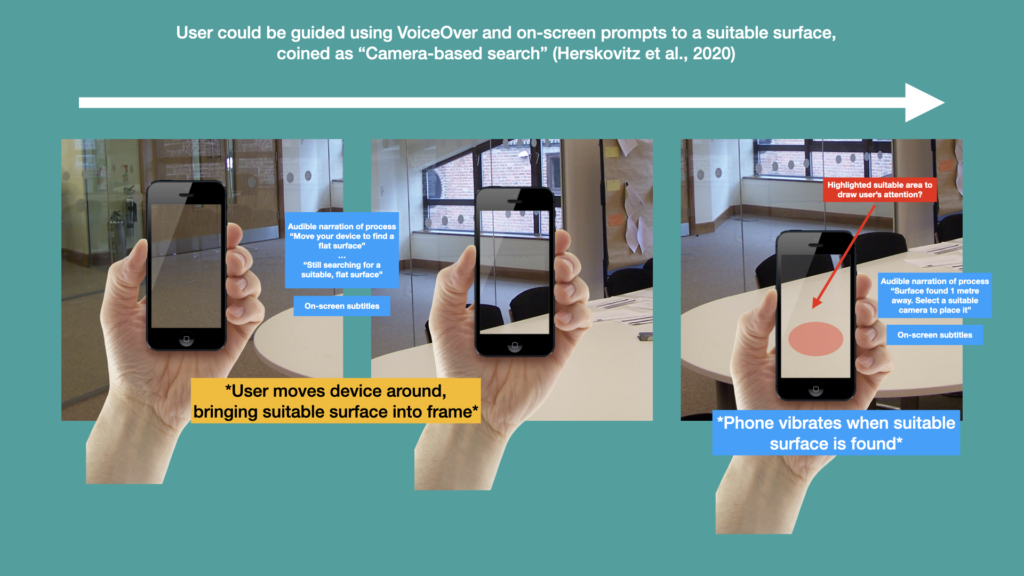

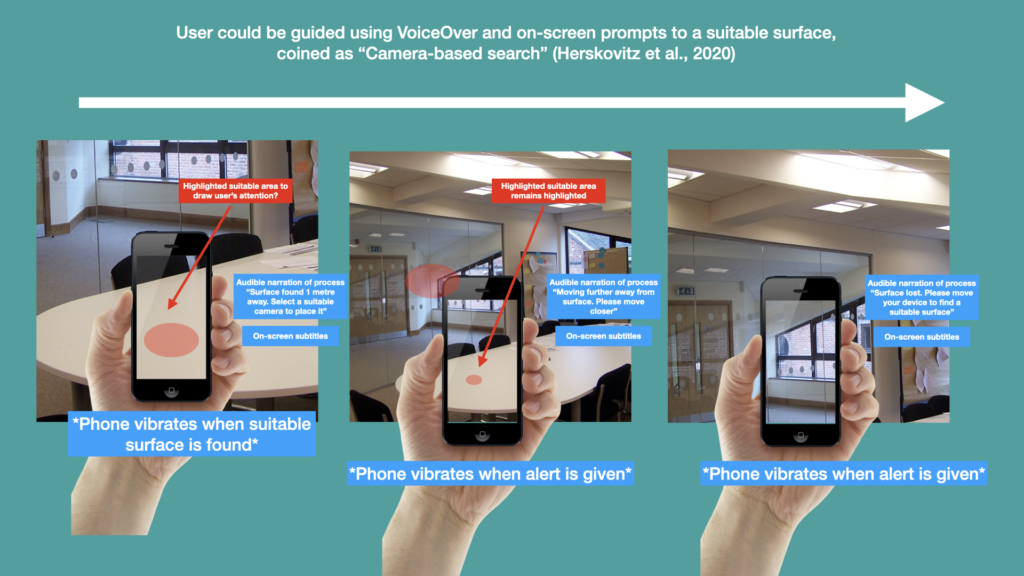
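The tap-to-rotate idea can be sketched as a very small piece of state: each tap adds 90° and the angle wraps back to 0° after a full turn. This is only an illustration of the logic in Python, not app code; the names `ROTATION_STEP_DEGREES` and `next_rotation_angle` are my own placeholders.

```python
# Hypothetical constant: each tap turns the view by a quarter turn.
ROTATION_STEP_DEGREES = 90


def next_rotation_angle(current_angle: int) -> int:
    """Return the angle after one tap, wrapping round at 360 degrees."""
    return (current_angle + ROTATION_STEP_DEGREES) % 360


# Simulate four taps starting from 0 degrees.
angle = 0
angles_after_taps = []
for _ in range(4):
    angle = next_rotation_angle(angle)
    angles_after_taps.append(angle)
```

Four taps bring the view back to where it started, so a user never needs a swipe to undo a rotation.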

Below, I’ve documented my understanding of the camera-based search idea in Herskovitz et al.’s research paper. The device will guide the user to find a suitable place using both subtitles and VoiceOver, accompanied by device vibrations. I am a little dubious about how well this will communicate with the user when multiple surfaces have been found, but this is an issue that might need to be resolved later in the design process.

Another potential issue with a camera-based search will be how the system responds when the user moves further away from the suitable surface. Will the device give audible/visual prompts to alert the user about how far away they have moved? Will the device tell the user when they’ve lost sight of the suitable surface completely? Will phone vibrations be different depending on the message being communicated? Short vibrations for directions and a long vibration for when the surface is lost completely? I don’t know, and these issues will need to be considered as the design process moves forward if I implement this functionality.

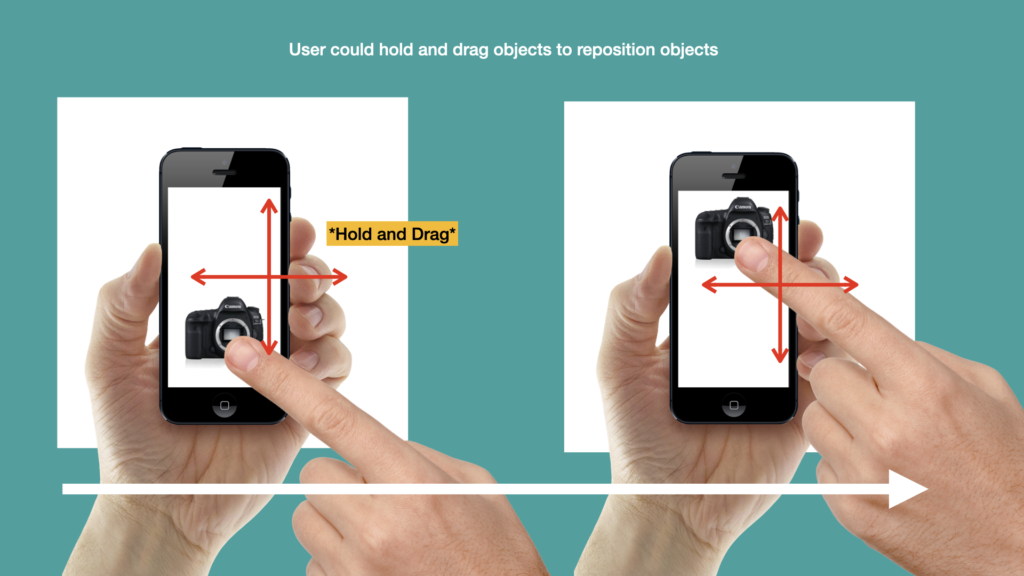
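One possible answer to the vibration questions above, sketched purely to make the options concrete: short pulses while the user is drifting away from the surface, and a single long pulse once it is lost. Everything here is an assumption for illustration, including the 0.5 m threshold, the pulse durations, the prompt wording, and the idea that the app can read a distance to the surface at all.

```python
from enum import Enum


class GuidanceState(Enum):
    ON_SURFACE = "on_surface"  # surface in view and close enough
    DRIFTING = "drifting"      # surface in view, but the user is moving away
    LOST = "lost"              # surface no longer in view


# Hypothetical threshold: beyond this distance the user counts as drifting.
DRIFT_THRESHOLD_METRES = 0.5

# Vibration patterns as lists of pulse lengths in milliseconds (assumed values).
VIBRATION_PATTERNS = {
    GuidanceState.ON_SURFACE: [],
    GuidanceState.DRIFTING: [100, 100],  # two short pulses for directions
    GuidanceState.LOST: [800],           # one long pulse when the surface is lost
}

# The same text could feed both the subtitles and VoiceOver (assumed wording).
PROMPTS = {
    GuidanceState.ON_SURFACE: "",
    GuidanceState.DRIFTING: "You are moving away from the surface.",
    GuidanceState.LOST: "The surface is no longer in view.",
}


def guidance_state(surface_visible: bool, distance_metres: float) -> GuidanceState:
    """Classify the situation from what the camera can currently see."""
    if not surface_visible:
        return GuidanceState.LOST
    if distance_metres > DRIFT_THRESHOLD_METRES:
        return GuidanceState.DRIFTING
    return GuidanceState.ON_SURFACE


def feedback_for(surface_visible: bool, distance_metres: float):
    """Return the (vibration pattern, prompt text) pair for the current state."""
    state = guidance_state(surface_visible, distance_metres)
    return VIBRATION_PATTERNS[state], PROMPTS[state]
```

Keeping the patterns and prompts in lookup tables would make it easy to change them after user testing without touching the logic.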

Below: once the object has been placed, I imagine that a hold-and-drag gesture could allow it to be moved around. However, this would essentially involve the user swiping the object around the display, a task that some users with motor impairments may struggle with…

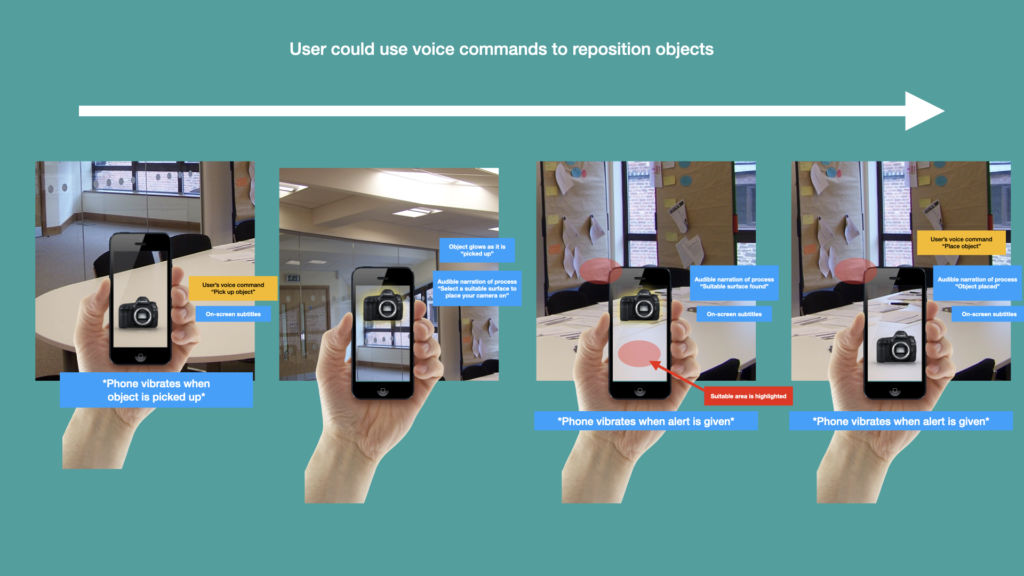

…Instead, it might be achievable to use voice commands. The user could pick up the object with one voice command, move around with their device until another suitable surface is in the frame, and then use another voice command to set the object down. See below…

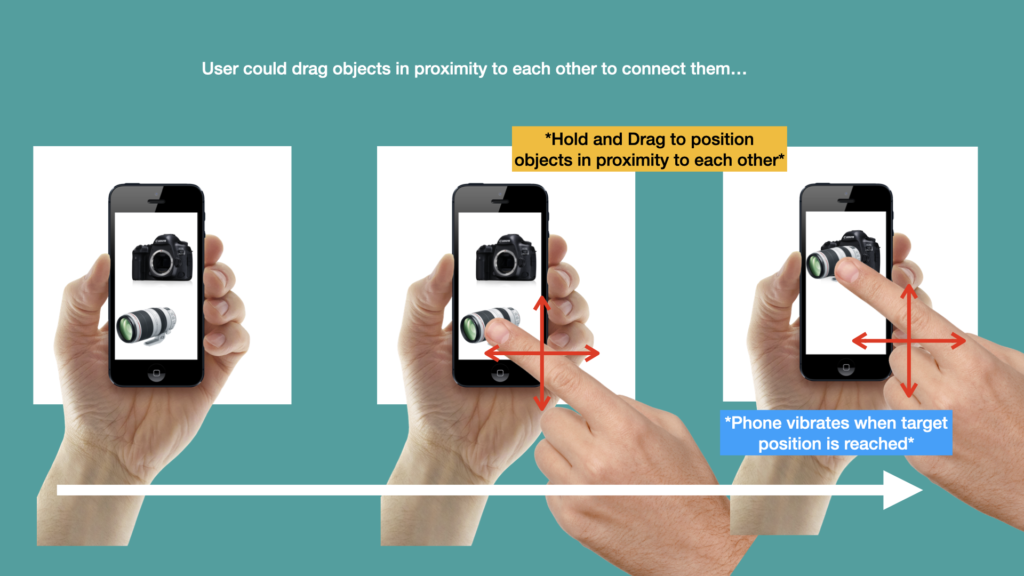
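The pick-up and set-down flow is effectively a two-state machine: the object is either resting on a surface or being carried with the device. A minimal sketch of that logic follows, assuming the phrases "pick up" and "set down" (placeholders, not decided wording) and assuming the app already knows which surface, if any, is currently in the frame.

```python
from typing import Optional


class PlacedObject:
    """Tracks whether a virtual object is resting on a surface or being carried."""

    def __init__(self, surface: str):
        self.surface = surface  # the surface the object last rested on
        self.held = False       # True while the object travels with the device

    def handle_command(self, command: str, surface_in_frame: Optional[str]) -> str:
        """Apply a spoken command and return the message to show or speak back."""
        command = command.strip().lower()
        if command == "pick up":
            if self.held:
                return "Already holding the object"
            self.held = True
            return "Picked up"
        if command == "set down":
            if not self.held:
                return "Nothing to set down"
            if surface_in_frame is None:
                # Keep holding the object until a suitable surface is found.
                return "No suitable surface in frame"
            self.surface = surface_in_frame
            self.held = False
            return "Set down on " + surface_in_frame
        return "Command not recognised"


# Example: move an object from a table to a shelf (hypothetical surface names).
camera_model = PlacedObject(surface="table")
first_reply = camera_model.handle_command("Pick up", surface_in_frame="table")
second_reply = camera_model.handle_command("set down", surface_in_frame=None)
third_reply = camera_model.handle_command("set down", surface_in_frame="shelf")
```

Returning a message for every command, including the failed ones, gives the subtitles and VoiceOver something to announce, so the user is never left guessing whether a command was heard.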

I had another idea involving swipe gestures, which would allow users to move objects together in order to learn how they connect and the routines of building camera ‘setups’. A simple example is given on the slide, whereby a user can hold and drag a camera lens to the mount on the camera body. As explained previously, this could be problematic for users with motor impairments…

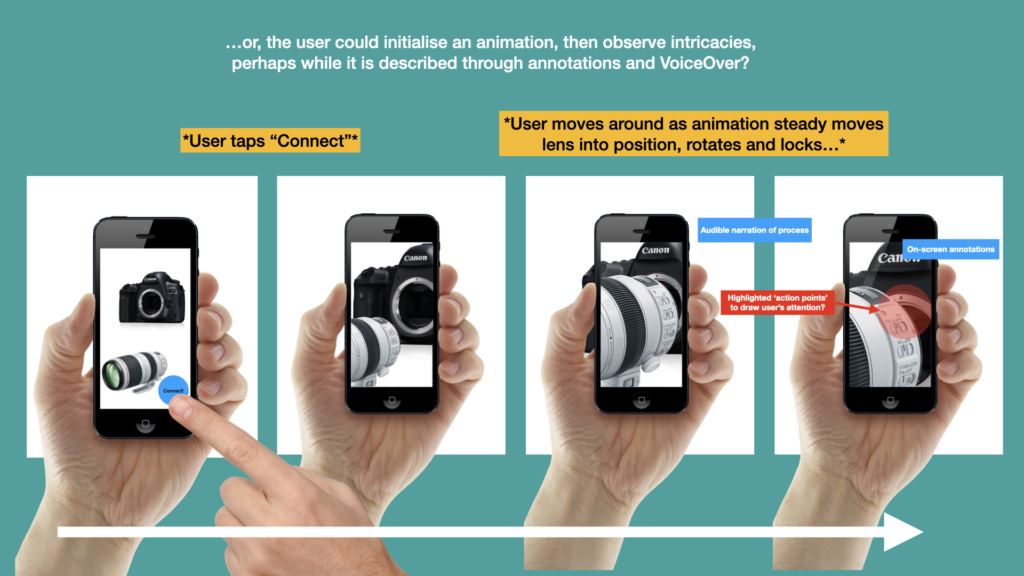

…a solution to this could be to create scenarios. In the scenario above, the user will press the connect button to begin the animation. They could then move around the camera and the lens as they watch them steadily come together and connect. A really good reason for doing this is that users can observe key moments in the animation, which could also be highlighted in some way, such as with a glowing red orb around ‘action points’. Although this might not be considered active learning, I do think that, given the small screen real estate of mobile devices, it is more important that they see the important aspects of the animation than use a finger to drag objects together, which doesn’t really bear much resemblance to the physicality of connecting a lens with a camera body. So, all in all, I see more value in this method.

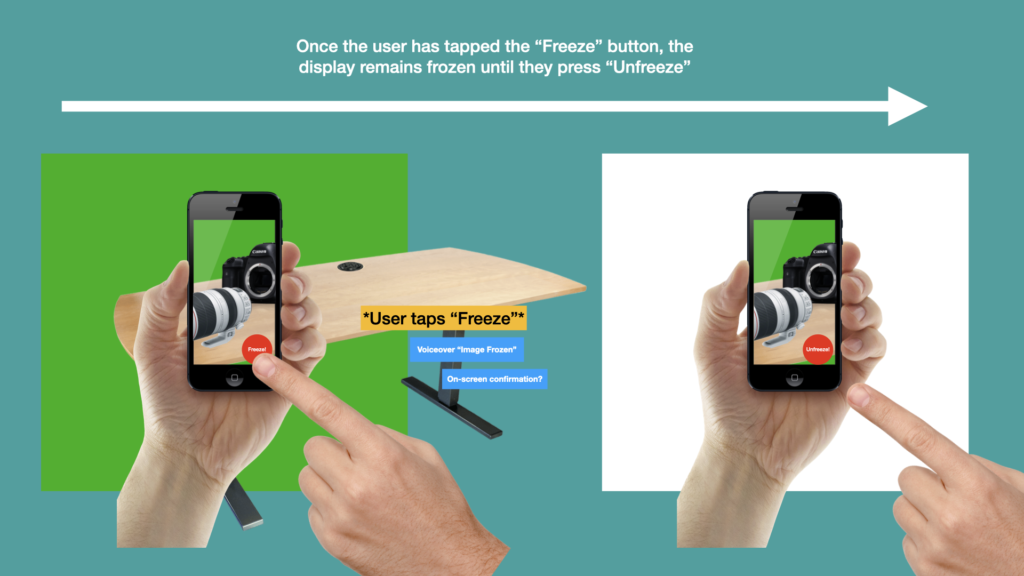

As below, the Freeze feature could also work in a very similar way. A button would enable the freezing feature which would keep everything on screen the same until the user presses the unfreeze button. This might allow them to temporarily freeze the image while they sit down and observe what is on the screen. The image will also be stored for later in case they want to refer back to it in their studies.
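As a rough sketch of the Freeze behaviour described here: one button toggles between a live view and a held frame, and every frozen frame is also kept for later reference. The class and method names are placeholders of mine, and frames are represented as plain strings purely for illustration.

```python
class FreezeController:
    """Toggles between the live camera view and a single held frame."""

    def __init__(self):
        self.is_frozen = False
        self.frozen_frame = None
        self.saved_frames = []  # frozen frames kept for the user to revisit

    def toggle(self, live_frame):
        """Freeze on the current frame, or unfreeze if already frozen."""
        if self.is_frozen:
            self.is_frozen = False
            self.frozen_frame = None
        else:
            self.is_frozen = True
            self.frozen_frame = live_frame
            self.saved_frames.append(live_frame)

    def frame_to_display(self, live_frame):
        """Return the held frame while frozen, otherwise the live frame."""
        return self.frozen_frame if self.is_frozen else live_frame


# Example with placeholder frame names.
controller = FreezeController()
controller.toggle("frame-1")                       # user presses freeze
shown_while_frozen = controller.frame_to_display("frame-2")
controller.toggle("frame-3")                       # user presses unfreeze
shown_after_unfreeze = controller.frame_to_display("frame-4")
```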