This week I filmed some test footage for experimenting with photogrammetry scans that I’ve created using Apple’s Object Capture API. The footage was filmed in a classroom: the camera pans across the room and then lingers on an empty table, steadily rotating and zooming in on the flat table surface. When filming, my thinking was that if I placed a virtual object such as a camera on that table, I could give the illusion that I was purposefully moving around it to get a better look at its interface.

In the explanation that follows, I use some of the processes outlined in this tutorial: https://helpx.adobe.com/after-effects/how-to/insert-objects-after-effects.html

Having filmed some test video footage, I could proceed with initial experiments, starting with Adobe After Effects. As I was not looking to create an interactive version of the prototype, but rather a version that I could present to potential investors and stakeholders, I only needed to create video footage that looked like the augmented reality experience.

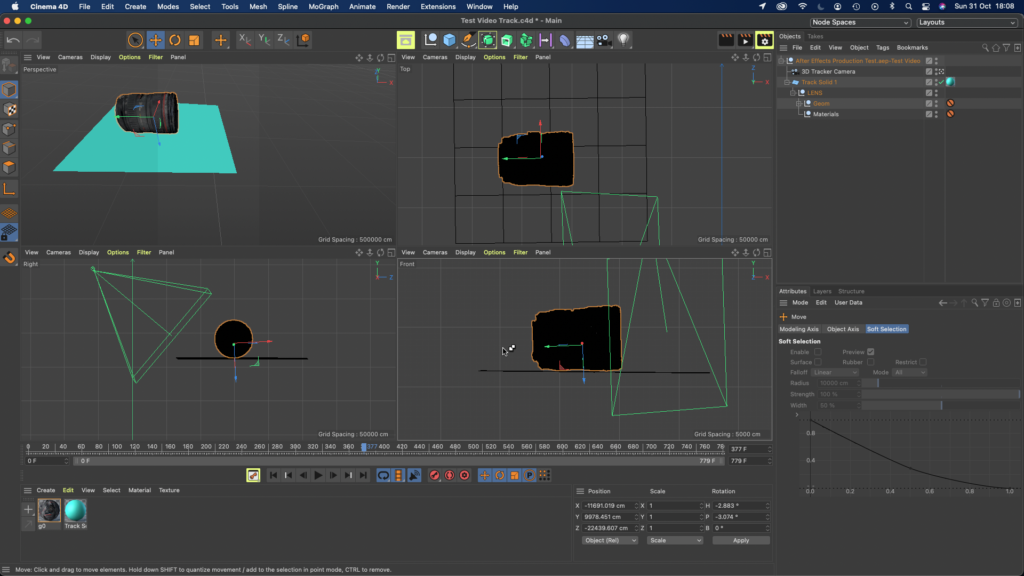

The example below shows an experiment in which I placed my photogrammetry scan of a lens onto a table using a combination of Adobe After Effects and Cinema 4D Lite. I found this process very difficult, as I am not au fait with working in 3D software – it is far from the two-dimensional design fields that I’m used to working within, such as photography and graphic design. Working with four program windows, covering the X, Y and Z axes, was challenging. However, I feel I was able to use them effectively to create a rudimentary example of my vision.

The amount of time required to produce this video was easily the largest drawback of the method. The rendering time for this 25-second video was well over one hour. Although this doesn’t sound like much, it adds up quickly when you consider the need for iterations and further tweaks, each of which means rendering again.

I carried out a similar experiment using Apple’s Reality Composer software, which is built into Xcode. In this software I could construct AR experiences using the .usdz objects that I had created via photogrammetry. My first attempt at this wasn’t very responsive, potentially due to the large file sizes of my photogrammetry scans. Once I had reduced the quality (and file sizes) of the scans, the outcome was much more responsive. I’ll write a blog post about this shortly…