To ensure that I’m achieving the DM7903: Design Practice Learning Outcomes, I’ve decided to consider my project from the perspective of the United Nations’ Sustainable Development Goal 17: Partnerships for the Goals.

Throughout my time on the MA Digital Media Practice course, I’ve established a partnership between myself (acting as an App Designer and Media Trainer) and the University of Winchester, with the primary goal of increasing student and staff access to technology in the University’s Multimedia Centre (MMC). By establishing this partnership with the University, I have demonstrated an awareness of an entity that consists of like-minded individuals, with whom I can build a working relationship (United Nations and The Partnering Initiative, 2020, p. 31). In collaborating with the MMC team, I have consequently been able to enquire about the needs of their students (e.g. through the research in my DM7921: Design Research module), and to find opportunities for innovative projects such as my design work in DM7917 and DM7903 (United Nations and The Partnering Initiative, 2020, p. 31).

This approach of aligning with a team and actioning innovative design ideas is an important one, which may epitomise the goal of partnering to achieve the UN Sustainable Development Goals.

As the University is looking to grow throughout the next decade as part of its ‘smart growth target’, it may need innovative solutions that are scalable. I understand that the University is looking to expand its existing courses, teaching many more students, potentially placing strain upon areas such as the training provision in the MMC (The University of Winchester, 2021). This expansion may also increase the demand for media equipment as students continue to work towards completing their module assessments.

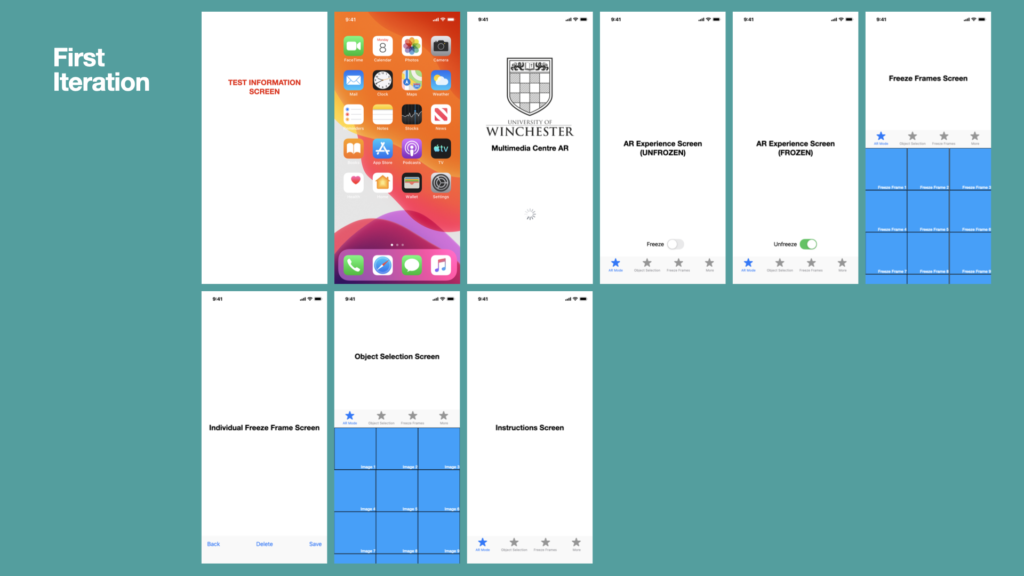

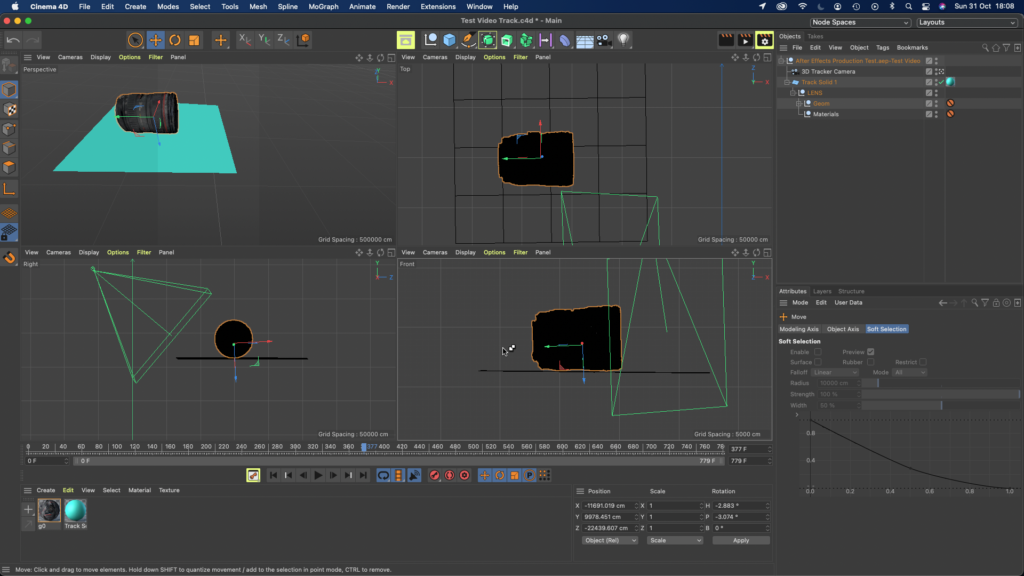

In this module, I am developing a proof-of-concept for an AR-based training provision, which has the potential to scale as training demands grow. A mobile app would be a good distribution method for this AR experience, as it could be downloaded for free by many users across both iOS and Android platforms, then used as a training resource at any place and any time. Trainees would be able to train both remotely and asynchronously, reducing the need for students and staff to travel to campus for training.

The change in training provision and the accompanying requirement for remote learning would take some time to be fully adopted. During this time, I would see the University as an ‘enabler… providing the space and time for the platform to develop’ (United Nations and The Partnering Initiative, 2020, p. 28).

The positive environmental impact of removing the need for on-campus attendance in training sessions would help the University on its journey to becoming carbon neutral, and address UN Sustainable Development Goal 13: ‘Take urgent action to combat climate change and its impacts’ (United Nations, 2021). This may also reduce the capacity pressures on the University’s estate, as increasing capacity demands could be offset by training being delivered remotely.

However, one limitation of the Multimedia Centre AR application is that there are currently no plans to include any assessment tasks. This means that trainees’ competency with MMC technology cannot be assessed remotely or asynchronously. This limitation could be overcome by a mobile application such as the prototype I designed for the DM7917: Emerging Media project, which featured ‘hazard perception’-style assessments and reaction-time testing (Helcoop, 2021).

As well as increasing access to technology for its students, the AR-based training app could enable further knowledge sharing within the wider community, including providing support for commercial use of the University’s media equipment, even outside of office hours. An example of this would be a school whose staff book one of the University’s auditoriums and related equipment to host and record a nativity play. The app would enable the school’s staff to train themselves on the required equipment in advance of the booking date.

Furthermore, there is potential for this AR-based training provision to be replicated by other universities and science/technology-based industries, as the UN strives for a common agenda on partnerships both nationally and globally (United Nations and The Partnering Initiative, 2020, p. 15).

References

Helcoop, C. (2021). DM7917 Emerging Media Student Directed Project. [online] Christopher Helcoop Portfolio. Available at: https://christopherhelcoop.winchesterdigital.co.uk/index.php/dm7917/ [Accessed 20 Nov. 2021].

United Nations (2021). Goal 13 | Department of Economic and Social Affairs. [online] United Nations Sustainable Development. Available at: https://sdgs.un.org/goals/goal13 [Accessed 23 Nov. 2021].

United Nations and The Partnering Initiative (2020). Partnership Platforms for the Sustainable Development Goals: Learning from Practice. [online] United Nations Sustainable Development. Available at: https://sustainabledevelopment.un.org/content/documents/2699Platforms_for_Partnership_Report_v0.92.pdf [Accessed 22 Nov. 2021].

The University of Winchester (2021). Staff Open Meeting with ELT.