This week I wasn’t able to attend the critique in lecture due to a work commitment. However, to make up for it, I decided to take stock of my progress on my project.

I’m in a good position so far: I’ve completed some research into Augmented Reality and its use in Higher Education/Teaching, including gathering some ideas on how accessibility issues can be approached.

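I was particularly interested in how Augmented Reality experiences could be responsive based upon “spatial, temporal or spectral features”. In their research paper, Faller et al. describe interaction based on “Steady-State Visual Evoked Potentials” (SSVEP for short). When a user looks at a visual stimulus flickering at a fixed frequency, their brain produces an electrical response at that same frequency, which can be measured with EEG. By presenting several controls that each flicker at a different frequency, and detecting which frequency dominates the user’s EEG signal, the system can tell which control the user is focusing on. This is an example of a “brain-computer interface” (BCI): the user’s brain activity is translated directly into commands, with constant communication between the user and the computer while the experience is taking place.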

In the study’s navigation activity, the user navigates a course by issuing commands through the BCI. I can imagine a constant feedback loop, whereby the user reacts to the information given by the experience, and the experience (and the information within it) then updates based upon the user’s input and their relationship with the obstacle course around them. In essence, I think this shows how a brain-computer interface works in practice, and is a fundamental example of how AR experiences can provide useful functionality for users.
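To make the SSVEP idea more concrete, here is a minimal Python sketch of the basic principle: each on-screen control flickers at its own frequency, and the system picks the control whose frequency (plus its second harmonic) carries the most power in the EEG signal. This is a simplified illustration under stated assumptions, not Faller et al.’s actual classifier. The stimulus frequencies, the command names and the synthetic single-channel “EEG” signal are all hypothetical, and real systems use multiple electrodes, filtering and more robust methods such as canonical correlation analysis.

```python
import math

def power_at(signal, fs, freq):
    """Power of `signal` at `freq` Hz, computed as a single DFT bin."""
    re = sum(x * math.cos(2 * math.pi * freq * n / fs) for n, x in enumerate(signal))
    im = sum(x * math.sin(2 * math.pi * freq * n / fs) for n, x in enumerate(signal))
    return (re * re + im * im) / len(signal)

def detect_target(signal, fs, stimulus_freqs):
    """Return the stimulus frequency whose fundamental + 2nd harmonic has most power."""
    scores = {f: power_at(signal, fs, f) + power_at(signal, fs, 2 * f)
              for f in stimulus_freqs}
    return max(scores, key=scores.get)

# Hypothetical mapping of flicker frequencies (Hz) to navigation commands.
commands = {8.0: "turn left", 12.0: "go forward", 15.0: "turn right"}

# Synthetic 2-second single-channel "EEG" sampled at 256 Hz: a strong 12 Hz
# response (user looking at the 12 Hz control) plus a weaker 20 Hz component.
fs = 256
eeg = [math.sin(2 * math.pi * 12 * n / fs) + 0.3 * math.sin(2 * math.pi * 20 * n / fs)
       for n in range(2 * fs)]

target = detect_target(eeg, fs, list(commands))
print(target, commands[target])  # 12.0 go forward
```

Because each detected frequency maps straight to a command, this is where the feedback loop closes: the experience updates, the user looks at the next control, and the cycle repeats.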

Regarding accessibility, Faller et al. make comparisons between virtual reality and augmented reality, and I can see how an augmented reality experience could present more challenges than a virtual reality counterpart. The colour contrast between on-screen graphics and the background would be a particularly challenging aspect. In virtual reality the developer has a large degree of control over the placement of colour and light within the experience, whereas in augmented reality the user can start the experience in many real-world settings where lighting, the colour of décor and other factors could make on-screen graphics harder to see. The idea of “background sensitive adjustment of contrast”, as described in this paper, is an interesting one that I could implement in my experience for this module.
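One way to approach “background sensitive adjustment of contrast” is to sample the colour of the camera image behind a label and choose the label colour that contrasts most with it. The sketch below uses the WCAG 2.x relative luminance and contrast ratio formulas, with 4.5:1 as the minimum (the WCAG AA threshold for normal-sized text). It assumes the background colour has already been sampled from the camera frame; that sampling step is not shown, and all function names here are hypothetical rather than taken from Faller et al.

```python
def relative_luminance(rgb):
    """WCAG 2.x relative luminance of an sRGB colour given as (r, g, b) in 0-255."""
    def linearise(channel):
        c = channel / 255
        return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4
    r, g, b = (linearise(ch) for ch in rgb)
    return 0.2126 * r + 0.7152 * g + 0.0722 * b

def contrast_ratio(colour_a, colour_b):
    """WCAG contrast ratio, from 1:1 (identical) up to 21:1 (black on white)."""
    la, lb = relative_luminance(colour_a), relative_luminance(colour_b)
    return (max(la, lb) + 0.05) / (min(la, lb) + 0.05)

BLACK, WHITE = (0, 0, 0), (255, 255, 255)

def pick_label_colour(background, candidates=(WHITE, BLACK)):
    """Pick the candidate text colour that contrasts most with the sampled background."""
    return max(candidates, key=lambda c: contrast_ratio(c, background))

def needs_backing_panel(label_colour, background, minimum=4.5):
    """True if a fixed label colour falls below the minimum contrast (WCAG AA: 4.5:1)."""
    return contrast_ratio(label_colour, background) < minimum

# Hypothetical background colours sampled from the camera image behind a label.
print(pick_label_colour((230, 220, 200)))  # bright beige wall -> (0, 0, 0)
print(pick_label_colour((30, 30, 40)))     # dim room -> (255, 255, 255)
```

`needs_backing_panel` covers the case where the label colour is fixed (for example a coloured button): if it falls below the threshold against the current background, a semi-opaque panel could be drawn behind the label instead of changing its colour.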

Another good example of the relationship between accessibility and AR is the “Freeze Frame” functionality described by Herskovitz et al. Their finding concerns users who aim the camera non-visually, such as blind and low-vision users: “Aiming cameras non-visually is, in general, known to be a hard problem, and we found that the difficulty is only magnified when the position must be held stable while also interacting with the mobile device”. Because freezing the view removes the need to hold the device steady, it could also help users with motor impairments. My thoughts on the “Freeze” ability would be to implement a toggle switch in the AR app’s user interface, enabling the user to freeze their device’s display so they can review the augmented reality scene without holding the camera in position.
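The proposed toggle could work roughly as follows. This is a platform-neutral Python sketch of the state logic only, assuming each camera frame arrives with the AR overlays already drawn on it. The class, its methods and the button labels are hypothetical, and in a real iOS app the frames would come from the AR framework rather than the placeholder strings used here.

```python
class FreezeFrameController:
    """Hypothetical state logic for a 'Freeze' toggle in an AR view."""

    def __init__(self):
        self.frozen = False
        self._last_frame = None  # most recent frame shown to the user

    def toggle(self):
        """Flip between live and frozen; returns the new frozen state."""
        self.frozen = not self.frozen
        return self.frozen

    @property
    def button_label(self):
        # Text for the toggle button, which a screen reader would also read out,
        # so the label tells the user what tapping will do next.
        return "Unfreeze view" if self.frozen else "Freeze view"

    def frame_to_display(self, live_frame):
        """Return the frame the screen should show for this camera update.

        Live: show (and remember) the newest frame.
        Frozen: keep showing the remembered frame and ignore new ones,
        so the user can lower the device and still inspect the scene.
        If no frame has been seen yet, the first live frame is used.
        """
        if not self.frozen or self._last_frame is None:
            self._last_frame = live_frame
        return self._last_frame

# Usage with placeholder strings standing in for camera frames:
view = FreezeFrameController()
print(view.frame_to_display("frame 1"))  # frame 1
view.toggle()
# While frozen, new frames are ignored:
print(view.frame_to_display("frame 2"))  # frame 1
print(view.button_label)                 # Unfreeze view
view.toggle()
print(view.frame_to_display("frame 3"))  # frame 3
```

Changing the button’s label with its state means a screen reader can announce whether the view is currently frozen, which matters for exactly the users this feature is meant to help.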

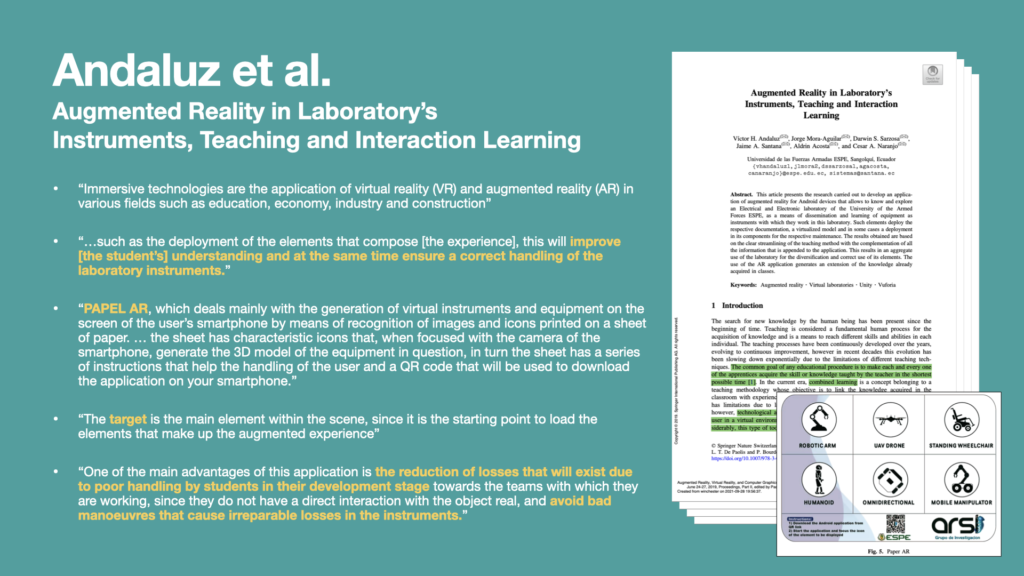

Another research paper, by Andaluz et al., provided some insight into how augmented reality is being used in the Higher Education sector to teach students how to use laboratory instruments. In Andaluz et al.’s research, the AR experience was triggered by the user aiming their mobile phone camera at a paper target. The example given was a sheet of paper with six targets, which was provided to the students. When they aimed their phone camera at a target, a virtual laboratory instrument appeared in front of them. All instructions were distributed via a QR code on the paper target, which students could scan using the same smartphone.

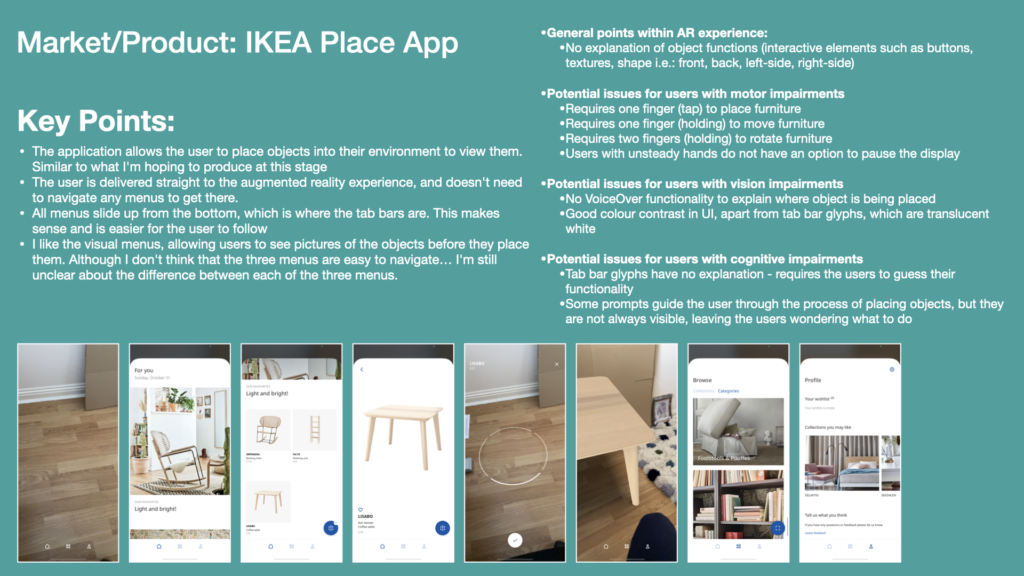

In my project I have a choice about whether or not to require a target to initiate the augmented reality experience. I am already aware of mobile apps such as IKEA’s “Place” app, which doesn’t require a target; instead, the user points the camera at a flat, horizontal surface for the virtual object to appear on, and confirms by tapping the screen. I suppose the paper-target approach could be seen as more accessible to users with particular cognitive and possibly vision impairments, as they would not need to interact with the user interface on the mobile device as much, and paper allows for a reasonably seamless blending of physical and virtual elements.

Andaluz et al. offer interesting justifications for pursuing this technology in laboratory-based scenarios, which I believe could transfer to training students on the equipment in the Multimedia Centre at the University of Winchester. Enabling students to explore virtual versions of instruments and equipment could reduce the number of losses and breakages caused by poor handling, and even prevent irreparable damage caused by students learning bad practices. A good example of an existing issue is students breaking lenses by forcibly removing them without knowing the correct method for doing so.

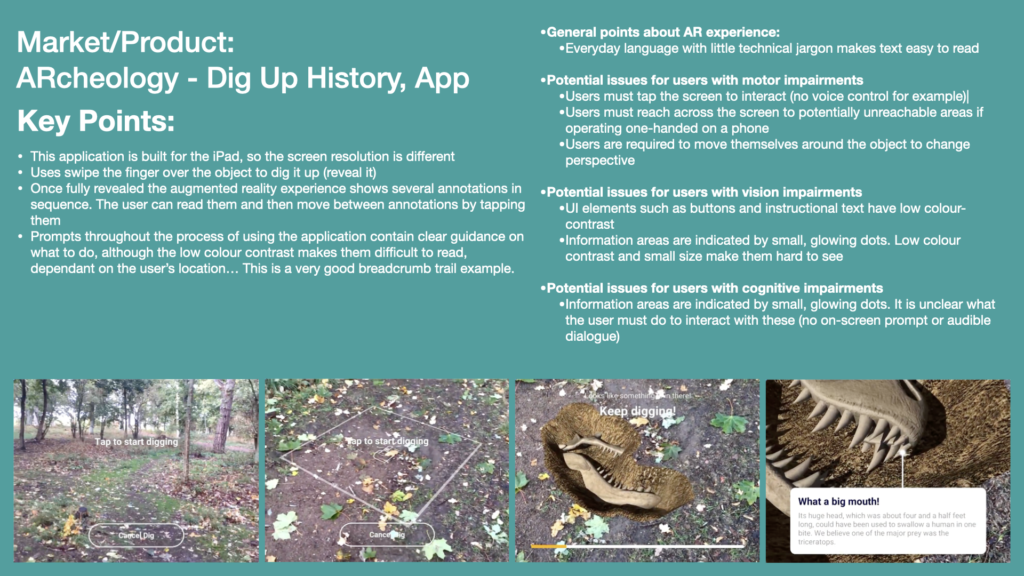

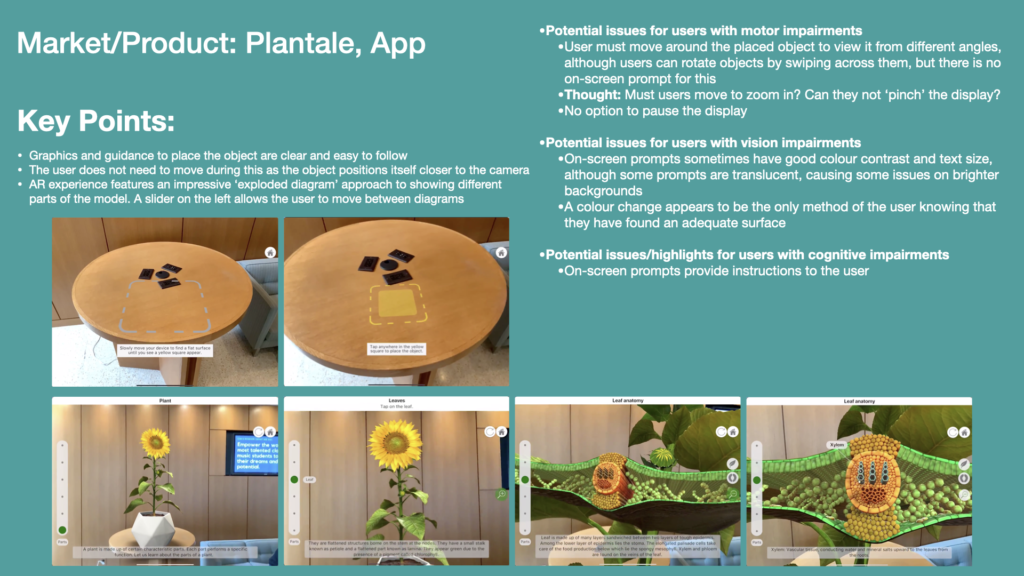

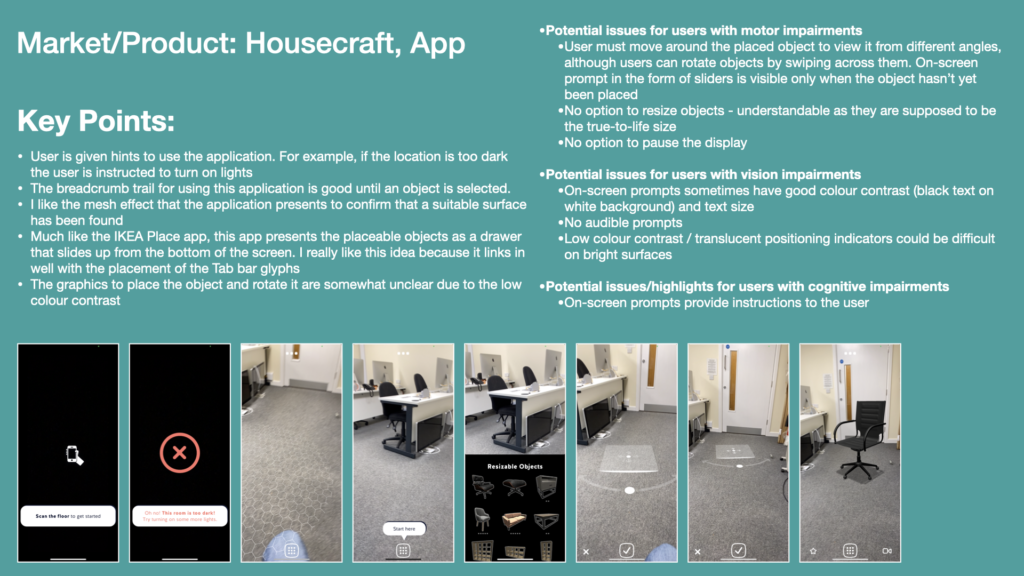

I’ve also completed some product research on existing augmented reality applications in Apple’s App Store. This has been useful, as it let me identify shortcomings in other developers’ approaches to accessibility. I will attempt to remedy some of these shortcomings when producing a proof of concept for my own application.

From my research into existing AR applications, I have concluded:

- The wide variety of environments that users can experience augmented reality in can present colour-contrast issues with on-screen buttons and instructions

- Interaction with these applications is mainly through tapping and swiping

- I found no evidence of applications offering voice control, so it is unclear how users who cannot use physical gestures could use these applications

- Most applications do not make written information available to VoiceOver (Apple’s built-in screen reader), so users must read instructions and annotations from the screen. This is made even more difficult when colour contrast issues are present

- Menus which originate from the tab bar (such as drawers) are a common trait of these applications

- Menu buttons tend to be placed in the lower portion of the screen, as placing them elsewhere can present difficulties for users with vision and motor impairments

- Care is needed when allowing users to place large objects, as users will then need to move around them

- Some applications aim to keep terminology simple and everyday, increasing understanding for users and reducing barriers for users with cognitive impairments

- Some applications allow users to progress at their own pace. For example, a slider down one side of the screen can be used to progress an exploded diagram. Alternatively, users can tap their way between annotations on the virtual object
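The slider-driven exploded diagram in the last point can be sketched as a simple linear interpolation: each part has an assembled position and an exploded position, and the slider value (clamped to the range 0 to 1) moves every part proportionally between the two. This is a minimal sketch under assumptions: the part names and coordinates are hypothetical (a camera body and lens, echoing the lens-handling example above), and a real app would apply these positions to its 3D scene rather than return them.

```python
from dataclasses import dataclass

@dataclass
class Part:
    name: str
    assembled: tuple  # (x, y, z) position in metres when assembled
    exploded: tuple   # (x, y, z) position in metres when fully exploded

def lerp(a, b, t):
    """Linearly interpolate between points a and b (t=0 gives a, t=1 gives b)."""
    return tuple(ai + (bi - ai) * t for ai, bi in zip(a, b))

def positions_for_slider(parts, slider_value):
    """Map a slider value to a position for every part of the diagram."""
    t = min(max(slider_value, 0.0), 1.0)  # clamp out-of-range slider values
    return {p.name: lerp(p.assembled, p.exploded, t) for p in parts}

# Hypothetical two-part model: the lens slides forward off the camera body.
parts = [
    Part("body", (0.0, 0.0, 0.0), (0.0, 0.0, 0.0)),
    Part("lens", (0.0, 0.0, 0.0), (0.0, 0.0, 0.2)),
]
print(positions_for_slider(parts, 0.5)["lens"])  # (0.0, 0.0, 0.1)
```

Tapping between annotations could reuse the same function by snapping the slider to a few fixed values, one per annotation step.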

References

- Andaluz, V., Mora-Aguilar, J., Sarzosa, D., Santana, J., Acosta, A. and Naranjo, C. (2019). Augmented Reality in Laboratory’s Instruments, Teaching and Interaction Learning. Augmented Reality, Virtual Reality, and Computer Graphics: 6th International Conference, vol. 11614. [online] Available at: https://ebookcentral.proquest.com/lib/winchester/detail.action?pq-origsite=primo&docID=5923384#goto_toc [Accessed 30 Sep. 2021].

- Faller, J., Allison, B.Z., Brunner, C., Scherer, R., Schmalstieg, D., Pfurtscheller, G. and Neuper, C. (2017). A Feasibility Study on SSVEP-based Interaction with Motivating and Immersive Virtual and Augmented Reality. eprint arXiv:1701.03981. [online] Available at: https://arxiv.org/abs/1701.03981 [Accessed 25 Sep. 2021].

- Herskovitz, J., Wu, J., White, S., Pavel, A., Reyes, G., Guo, A. and Bigham, J. (2020). Making Mobile Augmented Reality Applications Accessible. ASSETS ’20: International ACM SIGACCESS Conference on Computers and Accessibility. [online] Available at: https://dl.acm.org/doi/10.1145/3373625.3417006 [Accessed 26 Sep. 2021].